Augmenting a Laser Pointer with a Diffraction Grating for Monoscopic 6DOF Detection

DOI:

https://doi.org/10.20385/1860-2037/4.2007.14

Keywords:

6DOF detection, Virtual Environment, diffraction grating, interaction, laser pointer

Abstract

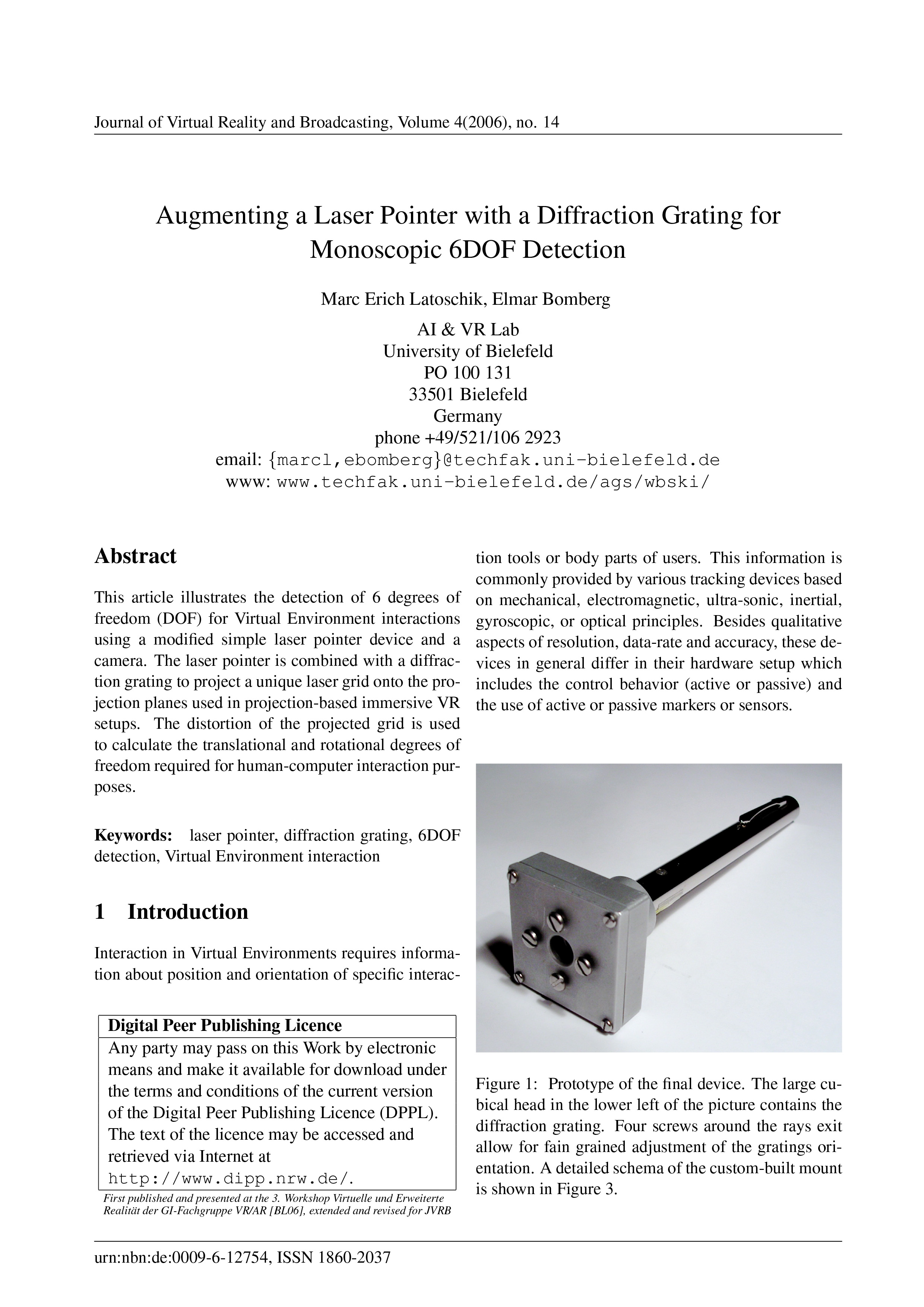

This article describes the detection of six degrees of freedom (6DOF) for Virtual Environment interactions using a simple modified laser pointer and a single camera. The laser pointer is combined with a diffraction grating to project a unique laser grid onto the projection planes used in projection-based immersive VR setups. The distortion of the projected grid, as observed by the camera, is used to calculate the translational and rotational degrees of freedom required for human-computer interaction.
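To illustrate why the projected grid carries distance information, the following minimal sketch applies the standard grating equation for normal incidence, d·sin(θ_m) = m·λ. It is not the authors' method. The wavelength (650 nm), the line density (100 lines/mm), and the function names are assumptions chosen for illustration only, and the projection plane is assumed to be perpendicular to the undiffracted beam.

```python
import math

def diffraction_angles(wavelength_nm, lines_per_mm, max_order=3):
    """Angles (radians) of the diffraction orders for normal incidence.

    Uses the grating equation d * sin(theta_m) = m * wavelength.
    Orders with |sin(theta_m)| >= 1 do not propagate and are skipped.
    """
    period_nm = 1e6 / lines_per_mm  # grating period d in nanometres
    angles = {}
    for m in range(-max_order, max_order + 1):
        s = m * wavelength_nm / period_nm
        if abs(s) < 1.0:
            angles[m] = math.asin(s)
    return angles

def spot_offsets(distance_m, angles):
    """Lateral offset (metres) of each order on a plane perpendicular
    to the undiffracted beam, at the given distance from the grating."""
    return {m: distance_m * math.tan(a) for m, a in angles.items()}

# Hypothetical values: red laser diode and a coarse grating.
angles = diffraction_angles(wavelength_nm=650.0, lines_per_mm=100.0)
near = spot_offsets(1.0, angles)
far = spot_offsets(2.0, angles)
```

Under these assumptions the spot offsets grow linearly with distance, so the apparent spacing of the grid gives a cue for translation along the beam, while tilting the pointer relative to the plane stretches the grid asymmetrically. A crossed grating would produce the same pattern along both axes, yielding a two-dimensional grid.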

Published

2008-01-22

Issue

Section

GI VR/AR 2006