
Investigations into Velocity and Distance Perception Based on Different Types of Moving Sound Sources with Respect to Auditory Virtual Environments

urn:nbn:de:0009-6-38009

Keywords: Spatial Hearing, Auditory Scene Analysis, Auralization, Moving Sound Source, Doppler shift


SWD: Psychoakustik, Räumliches Hören, Richtungshören

Abstract

The characteristics of moving sound sources have strong implications for the listener's distance perception and velocity estimation. Changes in typical vehicle sound emissions, as they are currently occurring with the shift towards electromobility, affect pedestrian safety in road traffic. Thus, investigations of the relevant cues for velocity and distance perception of moving sound sources are of interest not only to the psychoacoustic community, but also for several applications, e.g. virtual reality, noise pollution and road traffic safety. This article describes a series of psychoacoustic experiments in this field. Dichotic and diotic stimuli from a set of real-life recordings of a passing passenger car and a passing motorcycle were presented to test subjects, who were asked to determine the velocity of the object and its minimal distance from the listener. The results show that the estimated velocity is strongly linked to the object's distance. Furthermore, binaural cues were shown to contribute significantly to the perception of velocity. A further experiment showed that, independently of the type of vehicle, the main parameter for distance determination is the maximum sound pressure level at the listener's position. The article suggests a system architecture for the adequate consideration of moving sound sources in virtual auditory environments. Virtual environments can thus be used to investigate the influence of new vehicle powertrain concepts and the related sound emissions on pedestrians' ability to estimate the distance and velocity of moving objects.

Auditory distance and velocity perception of moving sound sources is of great importance in many everyday scenarios. Due to the limited visual field, humans quite often have to rely on the auditory channel in order to avoid collisions, e.g. when participating as a pedestrian or as a cyclist in road traffic [ Ker06 ]. In complex traffic situations different kinds of sound sources (e.g. trucks, motorcycles, passenger cars) with different spectral and temporal characteristics can be perceived. Thus, it seems surprising that the human distance and velocity perception mechanisms have not yet been examined in great detail for different types of moving sound sources. Only a few studies are available in literature, most of them employing artificial signals as stimuli and thus only considering some of the relevant cues.

When vehicle types with new powertrain concepts like hybrid or electric vehicles (HEV/BEV) have to be considered, a proper simulation of moving sound sources can be especially helpful to predict their implications on pedestrian safety. In the past, several applications in the field of virtual auditory environments were realized for which the distance perception of moving sound sources plays an important role [ Str98b, Str98a ], e.g. in driving or racing simulators.

It is well known that the listener's hearing experience dominates distance perception of stationary sound sources (cf. [ Col62, Col68 ]). Air absorption and sound pressure level are considered the most relevant factors contributing to the localization of sources at medium and large distances under free-field conditions or in environments with little reverberation (e.g. exterior environments). When the perception of non-stationary sound sources is examined, an analysis of the relevant distance perception cues is far more complex than for stationary sources. Here, temporal changes of the localization cues (e.g. Doppler shift, monaural sound pressure level, HRTFs resp. ITDs and ILDs) take effect as well.

In one of our previous studies, which was based on the typical scenario of a passing passenger car, we investigated which of the various cues are the main contributors to the distance and velocity estimates of the subjects. Recordings of a passing passenger car at various vehicle speeds and microphone distances were presented dichotically and diotically to the subjects in three psychoacoustic experiments (cf. [ PS09, SP07 ]). However, it has not been examined how these influences vary for different types of sound sources. This is of specific relevance with respect to real-world scenarios, where a mixture of different types of moving sound sources is typically encountered.

Considering distance and velocity perception of new types of vehicles is an important issue when proceeding towards electromobility. Estimating the implications of these new vehicle sounds might help to prevent accidents caused by misinterpreting their velocities and distances. The following key questions are addressed in this article:

- Which cues dominate the perception of distance and velocity of moving sound sources?

- How do the perceived velocity and the perceived distance vary for different types of moving sound sources in relation to their physical properties (e.g. true velocity and true distance)?

The structure of this paper is as follows: First, a brief overview of human distance and velocity perception is given and the relevant literature is briefly outlined. Then the recordings of the moving sound sources which were presented to the test subjects are described. Subsequently, two psychoacoustic experiments are explained in detail; the first one analyzes velocity perception, the second one investigates how distance perception depends on the type of the sound source and its acoustic properties. Moreover, several uses of moving sound sources in audio applications resp. virtual environments are discussed. Finally, it is described how a virtual audio environment system can be enhanced so that it allows the adequate simulation of moving sound sources and the performance of psychoacoustic research on this subject.

In the following section a brief overview on the distance and velocity perception of stationary and moving sound sources is given. A more detailed account of the relevant localization cues and the research in this field can be found in an essay by [ PS09 ]. The reader is encouraged to refer to this article.

Most of the research on distance perception has been performed for stationary sound sources. Estimating the egocentric distance from a sound source seems to be a very difficult task for subjects. Several investigations show that the distance blur is relatively high (e.g. [ vB49, Col68, MBL89 ]), whereas the directional localization blur is below 1° in the horizontal plane under static conditions [ Bla97 ].

The cues which are commonly regarded as relevant for the distance perception of sound sources are intensity, spectrum and direct-to-reverberant energy ratio (cf. [ ZBB05, Zah02 ]). In the case of near-head sources, dichotic cues are relevant as well [ Bla97 ]. Two more findings might be of relevance with respect to psychoacoustic research in this field: First, [ SS73 ] and [ Hel99 ] showed that head rotations do not improve the accuracy of distance judgments. Second, listeners tend to underestimate the true sound source distance at medium to large distances, whereas the true distance is overestimated for nearby sources [ Zah02 ].

In the case of non-stationary sound sources, additional cues affect distance perception: The sound pressure level and the influence of air absorption become time-variant when the distance between the source and the receiver changes. Furthermore, the Doppler shift provides additional information for the listener regarding distance perception. Moreover, dichotic cues potentially gain more influence on distance perception, even for large distances. Binaural cues help to create a spatial impression of the scene and facilitate the estimation of the source's movement angle. Due to the source's movement, all static cues implicitly become time-variant and will be termed 'dynamic cues' in the following, as suggested by [ SL93 ]. The listener may use this additional information and may thus potentially enhance the accuracy of the estimate. Table 1 was compiled by [ PS09 ] in order to categorize the various cues that are relevant for distance perception and for the determination of the sound source velocity.

Only a few psychoacoustic experiments dealing with the distance and velocity perception of moving sound sources have been described so far. Most of the studies were performed with artificial stimuli in a simulated environment. For an overview of the relevant literature, please refer to [ PS09 ]. Furthermore, it was shown by [ PS09 ] that the main contributor to the determination of distance is the maximum sound pressure level at the listener's position. However, dichotically presented sound sources were perceived to be closer than sound sources in the corresponding diotic situation, which can be explained by the binaural masking level difference (BMLD). In the case of the dichotic stimuli, it was assumed that the loudness difference between the moving source and the background noise increases due to binaural masking effects. Here, the source and the environmental noise can be separated more easily due to incoherent components that are contained in the ear signals. Hence, the improved separability of the source's signal from the background noise results in an increase of loudness, and the source is thus localized at a shorter distance than in the diotic case (cf. [ PS09 ]). Furthermore, a significant dependency between distance estimates and the velocity of the object was shown for a passenger car. This influence was explained by the fact that the sound emissions of the passing vehicles, and thus the static sound pressure level at the position of the listener, increase towards higher velocities. Apart from the static sound pressure level at the listener's position, no statistically significant influence of other dynamic cues on the perceived distance and velocity was found (cf. [ PS09 ]).
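To illustrate how the Doppler cue becomes time-variant during a pass-by, the following minimal sketch computes the received frequency of a pure tone emitted by a source that moves along a straight line past a stationary listener. It is not taken from the cited studies; the speed of sound (343 m/s), the function name `doppler_frequency` and the parameterization by emission time are assumptions made for illustration only.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at roughly 20 degrees Celsius


def doppler_frequency(f0_hz, speed_kph, min_distance_m, t_emit_s, c=SPEED_OF_SOUND):
    """Received frequency of a tone that was emitted at time t_emit_s.

    The source moves on a straight line at constant speed; t_emit_s = 0 is
    the moment of closest approach (distance min_distance_m > 0).
    """
    v = speed_kph / 3.6                    # convert kph to m/s
    x = v * t_emit_s                       # position along the track (0 = closest point)
    r = math.hypot(x, min_distance_m)      # current source-listener distance
    v_radial = v * x / r                   # negative while approaching, positive while receding
    return f0_hz * c / (c + v_radial)      # moving source, stationary listener


if __name__ == "__main__":
    # Compare a near and a far pass-by of a hypothetical 600 Hz component.
    for d in (4.0, 20.0):
        for t in (-2.0, -0.5, 0.0, 0.5, 2.0):
            f = doppler_frequency(600.0, 100.0, d, t)
            print(f"d = {d:4.1f} m, t = {t:+.1f} s: {f:7.2f} Hz")
```

For a given velocity, the total frequency span is the same at every distance, but the transition around the moment of passing is steeper for a nearby track. This is one way in which the Doppler cue can carry distance information.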

However, as the study by [ PS09 ] was performed for only one specific type of sound source, several open questions remain. In particular, it could not be clarified whether the observed effects are of a general nature or specific to the type of vehicle. This article investigates whether systematic effects on the distance and velocity estimates can be observed for vehicle types with entirely different sound characteristics.

Table 1. Classification of the various auditory cues into 'diotic' (resp. monaural) and 'dichotic' (resp. binaural) cues vs. 'static' and 'dynamic' cues. In the following paragraphs, the terms 'dichotic', 'diotic', 'static' and 'dynamic' will be used in order to distinguish the different categories

| | diotic (monaural) | dichotic (binaural) |
| --- | --- | --- |
| static | sound pressure; incidence direction: spectral colorizations due to air absorption (relevant for faraway sound sources); direct-to-reverberant energy ratio | incidence direction: HRTF (φ, δ), respectively interaural level differences (ILD) and interaural time differences (ITD) (relevant for nearby sources) |
| dynamic | change of sound pressure level over time; change of incidence direction over time: time resp. frequency scaling (pitch change) due to the Doppler effect (S(τ, t)); changes in spectral colorizations due to air absorption (relevant for faraway sound sources); changes in direct-to-reverberant energy ratio | change of incidence direction over time: HRTF (φ(t), δ(t)), respectively ITD(t) and ILD(t) |

Except for [ PS09 ] and [ Hel96 ] all of the investigations, which have been described in the previous section, made use of simulated scenarios or of synthetic stimuli. The psychoacoustic experiments presented in this article investigate the perception of sound source distance and velocity by applying a set of real-world measurements of a passing passenger car and of a passing motorcycle. Typically, the localization of moving sound sources is of practical importance with respect to the already mentioned scenarios - such as passing vehicles in traffic situations. In addition, the recorded scenarios can be used as a reference for the design and for the assessment of scenarios that have been created inside of a virtual environment.

The concept of using real-world recordings as stimuli within this study makes sense, since one of the key questions of this article is whether the results that have been found in [ PS09 ] can be generalized for different types of sound sources. In this context, the same recording setup has been chosen as in [ PS09 ] in order to avoid unintended effects on the experimental design and distortions of the subject's estimates.

Several conditions for passing vehicles were recorded at various distances between the vehicle and the microphones (resp. the listener) and at various velocities. Two types of microphones were used for the recordings: one omni-directional microphone (Beyerdynamic MM1) for the diotic stimuli and one artificial head (Head Acoustics HMS III, ID-equalized, calibrated to 100 dB(SPL) ≡ 0 dBFS) for the dichotic stimuli. The microphone signals were recorded simultaneously by means of a multi-track recording front end at distances of 4 m, 8 m, 12 m, 16 m and 20 m and with vehicle speeds of 40 kph, 60 kph, 80 kph and 100 kph. For practical reasons, the stated values refer to the distance between the microphones and the sideline of the public street. A passenger car (Opel Astra, service year 1997, manual transmission, I4 Otto engine with 1.6 l cylinder capacity) and a motorcycle (BMW K1100RS, model year 1994) were used as sound sources. In order to create almost stationary conditions of the exterior noise, the vehicles were driven in the most suitable gear for the required vehicle speed and without any gear changes. Furthermore, the particular vehicle and engine speeds were held as constant as possible for both vehicles. In the case of the motorcycle, the engine speed was held as constant as possible at about 3500 rpm in all recordings. Figure 1 shows the recording setup. Please refer to [ PS09 ] for more details.

Figure 1. Recording Setup: The recordings of the passenger car and the motorcycle were performed at various distances (d1⋯5 ) and velocities (v1⋯5 ). For practical reasons, the distance between the microphones and the outer sideline of the driving track has been set to the annotated values. In addition it is not possible to define a reference point in order to measure the physical distance to the source, because the underlying vehicles feature several contributors, e.g. exhaust system, air induction system and tires. Thus, the sources are neither ideally omni-directional nor feature a spherical geometry like an ideal point source/breathing monopole.

In order to verify that the various conditions were recorded properly, several quantities such as spectrum, short-time spectrum and sound pressure level have been extracted from the audio signals. This was possible, because the digital amplitudes of the recorded signals were calibrated to a reference sound pressure level of 100 dB(SPL) which corresponds to an amplitude of 0 dBFS. Moreover, the extracted quantities facilitate conclusions with respect to dependencies between the physical properties of the sound sources and the results of the psychoacoustic experiments. The properties resp. the extracted quantities of the recordings of the passenger car and the motorcycle are compared in the following:
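The calibration stated above (0 dBFS ≡ 100 dB(SPL)) allows digital amplitudes to be mapped to absolute levels. The following sketch shows this conversion. It assumes that amplitudes are expressed relative to a full-scale value of 1.0 and that the calibration refers to the same amplitude measure (e.g. RMS) that is passed in; the function names are hypothetical.

```python
import math

CAL_OFFSET_DB = 100.0   # from the text: 0 dBFS corresponds to 100 dB(SPL)
P_REF = 20e-6           # reference sound pressure in Pa


def rms(samples):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def amplitude_to_db_spl(amplitude, full_scale=1.0, offset_db=CAL_OFFSET_DB):
    """Convert an amplitude relative to digital full scale into dB(SPL)."""
    level_dbfs = 20.0 * math.log10(amplitude / full_scale)
    return level_dbfs + offset_db


def db_spl_to_pascal(level_db):
    """Convert a sound pressure level in dB(SPL) into a sound pressure in Pa."""
    return P_REF * 10.0 ** (level_db / 20.0)


if __name__ == "__main__":
    # One cycle of a hypothetical test tone with a peak amplitude of 0.1 full scale.
    tone = [0.1 * math.sin(2.0 * math.pi * n / 100.0) for n in range(100)]
    print(amplitude_to_db_spl(rms(tone)))
```

Under this calibration, an amplitude at one tenth of full scale corresponds to 80 dB(SPL), and a full-scale amplitude corresponds to a sound pressure of 2 Pa.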

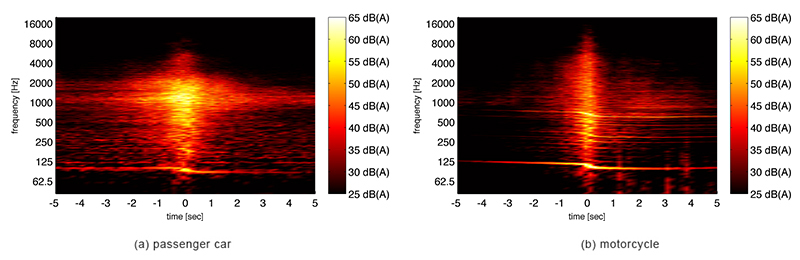

Spectrograms: As an example, the spectrograms for the passenger car and the motorcycle for condition v = 100 kph and d = 4 m are shown in Figure 2a and Figure 2b. Here, the Doppler shift - due to the translational movement of the vehicles - can easily be observed. Moreover, the differences in the sound character of the passenger car and of the motorcycle are shown.

The spectrogram of the passenger car's exterior sound shows strong contributions of broadband resp. stochastic components (e.g. tire noise) in addition to the harmonic components which correspond to the periodic engine order content that is typically emitted by the intake and exhaust system. In contrast, the spectrogram of the motorcycle's recording shows distinct harmonic components (engine orders) and much less stochastic content up to the higher frequency range. This corresponds well with the pronounced modulations of the time-envelope and thus with the much rougher sound character of the motorcycle.

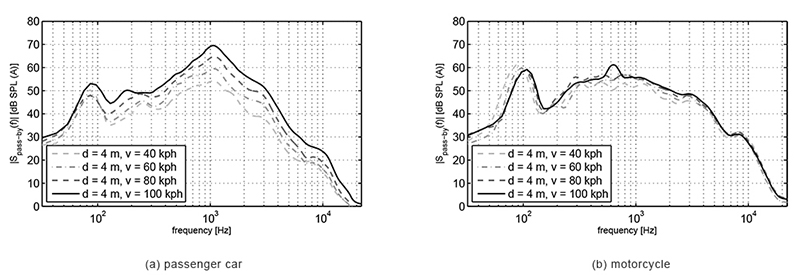

Magnitude Spectra (Pass-by-Situation): Figure 3a and Figure 3b show the magnitude spectra both for the recordings of the passenger car and of the motorcycle for various vehicle speeds (v1 , v2 , v3 and v4 ), but with a constant microphone distance of four meters. The spectral progressions have been calculated by means of a FFT-analysis, which was applied to a data window of approx. 750 ms (32768 data points, fs = 44.1 kHz). Here, the center of the extracted data window has been placed at the audio files' sample position which corresponds to the point in time when the vehicles passed the omni-directional microphone resp. the median plane of the artificial head. In addition, the extracted data vector was multiplied by a window function of the same length (von Hann-window) so as to minimize spectral leakage effects. In order to emphasize the envelope of the magnitude spectra and to show the relative differences between the recorded signals, the obtained FFT-bins were smoothed by using an adaptive third-octave sliding window.
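The analysis chain described above (extracting a frame centered on the moment of passing, applying a von Hann window, computing the FFT) can be sketched as follows. This is an illustrative standard-library sketch, not the authors' analysis code: the radix-2 FFT, the amplitude normalization and all function names are assumptions, and the A-weighting and third-octave smoothing used for Figure 3 are omitted. With 32768 points at fs = 44.1 kHz, the frame lasts about 0.74 s and the bin spacing is about 1.35 Hz.

```python
import cmath
import math


def hann(n):
    """Periodic von Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]


def fft(x):
    """Recursive radix-2 FFT; the length of x must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def centered_frame(signal, center_index, n):
    """Extract n samples centered on center_index (e.g. the moment of passing)."""
    start = center_index - n // 2
    if start < 0 or start + n > len(signal):
        raise ValueError("frame exceeds signal bounds")
    return signal[start:start + n]


def magnitude_spectrum_db(frame, fs):
    """Hann-windowed magnitude spectrum in dB re an amplitude of 1.0."""
    w = hann(len(frame))
    spec = fft([s * wi for s, wi in zip(frame, w)])
    scale = sum(w) / 2.0                       # compensates window gain for a sine
    half = len(frame) // 2
    freqs = [k * fs / len(frame) for k in range(half)]
    mags = [20.0 * math.log10(max(abs(spec[k]) / scale, 1e-12)) for k in range(half)]
    return freqs, mags
```

In practice one would typically use an optimized FFT library instead of the recursive routine shown here; the sketch only makes the individual steps explicit.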

It is remarkable that the progressions of the magnitude spectra of the motorcycle data only show a slight dependency on the vehicle speed. However, in case of v = 100 kph the spectrum shows a strong peak at about 600 Hz which is probably caused by dominant engine orders resp. due to changes in the operating conditions of the engine.

The analysis of the sound pressure levels was conducted as follows: For the calculation of the level-vs.-time curves a weighting filter with a time constant of 125 ms ('fast', exponential averaging) was chosen. In addition, the signals were processed with a spectral weighting filter (A-weighting curve) before the level-vs.-time curves were computed. The settings of the analysis follow a typical procedure for measuring sound pressure levels in noise pollution control, as for example suggested in the German standard DIN 4109 [ DIN89 ] and DIN-EN-61672-1 [ DE12 ].
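The two weighting steps mentioned above can be sketched as follows. The A-weighting function uses the commonly cited analytic magnitude response of the A-curve, and the time weighting is implemented as a first-order exponential average of the squared pressure with a time constant of 125 ms. Both are illustrative assumptions about how such an analysis can be realized; the function names are hypothetical and this is not the authors' implementation.

```python
import math


def a_weighting_db(f_hz):
    """A-weighting gain in dB at frequency f_hz (analytic curve, about 0 dB at 1 kHz)."""
    f2 = f_hz * f_hz
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(ra) + 2.00


def fast_level_db(pressure_pa, fs, tau=0.125, p_ref=20e-6):
    """Level-vs.-time curve with exponential ('fast') time weighting.

    pressure_pa: sound pressure samples in Pa (already spectrally weighted if desired)
    fs: sample rate in Hz, tau: time constant in seconds
    """
    alpha = 1.0 - math.exp(-1.0 / (fs * tau))   # smoothing coefficient per sample
    mean_square = 0.0
    levels = []
    for p in pressure_pa:
        mean_square += alpha * (p * p - mean_square)   # running mean of p squared
        levels.append(10.0 * math.log10(max(mean_square, 1e-30) / p_ref ** 2))
    return levels


if __name__ == "__main__":
    fs = 8000
    # Hypothetical 1 kHz tone with an RMS pressure of 1 Pa, lasting one second.
    tone = [math.sqrt(2.0) * math.sin(2.0 * math.pi * 1000.0 * n / fs) for n in range(fs)]
    print(fast_level_db(tone, fs)[-1], a_weighting_db(1000.0))
```

Applying the A-weighting to a time signal additionally requires a filter realization of the curve, which is beyond this sketch.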

Sound Pressure Level (Pass-by-Situation): The level-vs.-time curves were calculated both for the signals of the artificial head and of the omni-directional microphone. In case of the artificial head, the two level curves - which have been obtained for the ear microphones - were averaged sample by sample, which resulted in a single level-vs.-time vector. Furthermore, the maximum sound pressure levels (Lmax ) were extracted for the situation when the passenger car and the motorcycle passed the microphones.
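The averaging of the two ear signals and the extraction of Lmax can be sketched as follows. The caption of Figure 4 states that the ear signals were energetically averaged, so the sketch averages on an energy basis; whether this matches the authors' processing in every detail is an assumption, and the function names are hypothetical.

```python
import math


def energetic_mean_db(level_left_db, level_right_db):
    """Energetic (power-based) mean of two levels given in dB."""
    power = (10.0 ** (level_left_db / 10.0) + 10.0 ** (level_right_db / 10.0)) / 2.0
    return 10.0 * math.log10(power)


def average_ear_levels(left_db, right_db):
    """Sample-by-sample energetic average of two level-vs.-time curves."""
    return [energetic_mean_db(l, r) for l, r in zip(left_db, right_db)]


def max_level(levels_db):
    """Return (Lmax, index of Lmax) of a level-vs.-time curve."""
    l_max = max(levels_db)
    return l_max, levels_db.index(l_max)
```

The energetic mean differs from the arithmetic mean of the dB values whenever the two ear levels differ, which is typically the case while a vehicle approaches from one side.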

The comparison of the extracted maximum sound pressure levels showed that two recordings in case of the omni-directional microphone (namely the measurements of the passenger car with the conditions v = 40 kph at d = 4 m and v = 60 kph at d = 8 m) were not correctly calibrated. Thus, it was not possible to use these stimuli within the experiments.

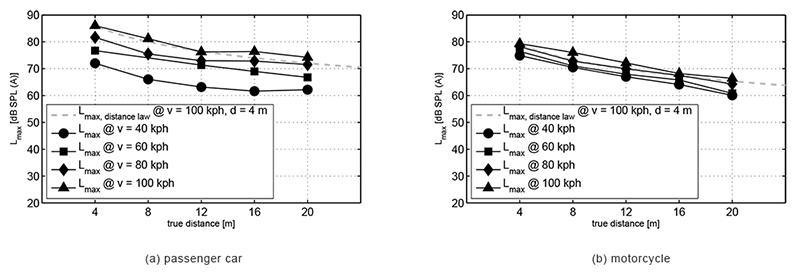

In Figure 4a and Figure 4b the influence of the vehicle's distance and velocity on the maximum sound pressure level (Lmax ) is shown for each vehicle. Here, the extracted values of the maximum sound pressure level do not exactly follow the distance law of an ideal point source under free-field conditions, as ground reflections interfere partly constructively and partly destructively. Furthermore, due to their directivity and size, the vehicles cannot be considered ideal point sources. Moreover, the influence of the velocity on the peak level is clearly much weaker for the motorcycle than for the passenger car. An explanation for this might be that in the case of the motorcycle the level is mainly determined by the engine sound, which depends only slightly on the velocity (since the engine speed was held at approx. 3500 rpm, see above). For the passenger car the level is significantly influenced by tire noise, which depends much more on the velocity of the vehicle.
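For reference, the distance law of an ideal point source under free-field conditions predicts a level decrease of about 6 dB per doubling of distance. The following sketch computes such a theoretical curve, anchored at the 4 m condition as done for the dashed line in Figure 4. The reference level of 80 dB used in the example is a hypothetical placeholder, not a measured value.

```python
import math


def point_source_level(l_ref_db, d_ref_m, d_m):
    """Level at distance d_m for an ideal point source in the free field,
    given the level l_ref_db at the reference distance d_ref_m."""
    return l_ref_db - 20.0 * math.log10(d_m / d_ref_m)


if __name__ == "__main__":
    L_REF = 80.0  # hypothetical peak level at d = 4 m, for illustration only
    for d in (4.0, 8.0, 12.0, 16.0, 20.0):
        print(f"d = {d:4.1f} m: {point_source_level(L_REF, 4.0, d):.1f} dB")
```

Between the nearest (4 m) and farthest (20 m) recording positions, the ideal law predicts a drop of roughly 14 dB, against which the measured peak levels can be compared.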

Figure 2. Spectrograms of the vehicles both passing at a distance of 4 m at a velocity of 100 kph. The analysis parameters have been chosen as follows: von Hann-window, window length: NFFT = 16384, time-overlap: L = NFFT/2, sample rate: fs = 44.1 kHz, spectral weighting = “A”.

Figure 3. FFT-magnitude spectra (third-octave-smoothed, A-weighted) of the passenger car (left) and the motorcycle (right) when passing at a distance of 4 m with various velocities. The analyzed signal frame had a window-length of about 750 ms (NFFT = 32768, Fs = 44.1 kHz).

Figure 4. Influence of true distance and velocity on the peak level (Lmax ) for the vehicles at the moment of passing. Here, the values of the peak levels have been retrieved from the level-vs.-time vectors by using the ear signals of the artificial head (energetically averaged), for the moment, when the vehicles passed the microphones. In addition, the theoretical curve for the peak level according to the distance law in case of a monopole source under free-field conditions is shown. Moreover, the curve was adapted to the peak level for the condition v = 100 kph and d = 4 m for the particular vehicle (dashed line).

Within this section, two psychoacoustic experiments on velocity and distance perception of linearly translating sound sources are described. The results of the two experiments using different types of sound sources - namely a motorcycle and a passenger car - are compared. Moreover, systematic influences of the physical properties of the stimuli on the velocity and distance estimates are investigated, and the salience of the available auditory cues is assessed.

The first experiment analyzes the influence of egocentric distance from a sound source on velocity perception. Here, the phenomenon of the so-called motion parallax can be observed as well. Furthermore, the influence of diotic cues (Doppler shift, dynamic and peak sound pressure level) and dichotic cues (interaural time differences and interaural level differences) on the velocity percepts is investigated.

The second experiment examines how well the perceived distance at the moment when the vehicle passes the listener coincides with the true distance. Beyond that, the degree to which sound source velocity alters distance perception is discussed.

According to the investigations of [ LW99 ], the Doppler shift dominates velocity perception. In contrast, dynamic changes of the interaural time differences and of the signal amplitude at the ear drums seem to be less important cues. In general, their experiments show that the Doppler cue gains perceptual salience for higher velocities and, therefore, becomes pre-dominant with respect to the remaining cues. This phenomenon can be explained by the increasing frequency changes (span and slew-rate), whereby the detection of pitch changes is facilitated. Experiment 1 investigates and compares the influence of the diotic cues (Doppler shift, sound pressure level and the influence of air absorption) and of the dichotic cues (interaural time differences and interaural level differences) on velocity perception.

In a previous study by [ PS09 ] it has been shown that an increase of distance results in a decrease of the perceived velocity. The mentioned effect is called motion parallax and can also be observed in visual depth perception (cf. [ Gol07 ]). In case of visual perception the distance of a moving object is determined on the basis of its velocity and its displacement on the retina (beside other cues): From the observer's perspective nearby objects seem to move faster than faraway objects (boundary condition: vsource = const.). An everyday situation where the effect of motion parallax occurs could, for instance, be the passing landscape, as soon as one looks out of a train's window. Furthermore, in [ PS09 ] it has been shown that for a passing passenger car binaural effects influence the estimation of velocity as well. Within this experiment the following questions are posed:

- How does the perceived velocity of the sound source vary for different types of moving sound sources?

- Which kind of influence do monaural cues (Doppler shift, sound pressure level) and binaural cues (interaural time difference, interaural level difference) have on velocity perception?
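The geometric basis of motion parallax can be illustrated with the angular velocity of a source on a straight track as seen from the listener: at the moment of closest approach it equals v/d, so a distant source sweeps past more slowly than a nearby source with the same speed. The following sketch is a purely geometric illustration with hypothetical function names; it is not a model of the subjects' judgments.

```python
import math


def angular_velocity(speed_kph, min_distance_m, t_s=0.0):
    """Angular velocity (rad/s) of a source on a straight track, seen from the listener.

    t_s = 0 is the moment of closest approach. The value follows from
    differentiating the azimuth theta(t) = atan(v * t / d) with respect to time.
    """
    v = speed_kph / 3.6   # convert kph to m/s
    d = min_distance_m
    return v * d / (d * d + (v * t_s) ** 2)


if __name__ == "__main__":
    for v in (40.0, 100.0):
        for d in (4.0, 20.0):
            print(f"v = {v:5.1f} kph, d = {d:4.1f} m: {angular_velocity(v, d):.2f} rad/s")
```

In this geometry, a vehicle passing at 100 kph and 20 m has a lower peak angular velocity than one passing at 40 kph and 4 m, which shows how strongly distance can interact with velocity cues that depend on the change of incidence direction.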

Method: Various psychoacoustic methods can be applied in order to determine the differences between diotic stimuli (recordings by means of the omni-directional microphone) and dichotic stimuli (binaural recordings), which have already been discussed in [ PS09 ] and shall not be repeated here. During the listening tests the recorded stimuli were presented either diotically or dichotically via headphones. The estimates of the vehicle's velocities were rated on a category scale by the subjects. Later, the results for dichotically and diotically presented recordings are compared and thus allow an identification of the influence of binaural cues.

Despite the inherent limitations of presenting real-world recordings via headphones, the authors decided to use such recordings as stimuli for the experiments. In contrast to simulated scenarios, the listener cannot utilize head rotations in order to avoid front-back confusions. Moreover, it is more difficult to create sound events which are localized outside of the head (externalized sources vs. inside-the-head locatedness (IHL), cf. [ Bla97 ]). However, an adequate simulation of such scenarios would be much more complex than the use of real-world recordings, in particular as soon as not only the moving sources but also the environmental resp. background noise needs to be simulated properly.

Subjects and Stimuli: In the experiment the subjects were asked to quantify the velocity of the object. In the first section ('Experiment 1a'), 20 dichotic stimuli of a passenger car were presented in a pseudo-randomized order, which varied among the subjects. Each stimulus was presented repeatedly within the sessions. The data of 22 subjects aged from 22 to 41 was evaluated. In the second part ('Experiment 1b'), 20 diotic stimuli of a passenger car were presented. All other parameters of the experiment remained unchanged. This time the data of 22 subjects, aged between 20 and 33, was used for evaluation. The same procedure was repeated for the motorcycle. In section 1c (20 dichotic stimuli of a motorcycle), 18 subjects aged from 22 to 32 participated in the experiment and in section 1d (20 diotic stimuli based on the recorded motorcycle), the results of 20 subjects aged from 21 to 30 were collected.

A PC-based graphical user interface was used to acquire the judgments. The subjects rated the perceived velocity on a seven-point category scale (translated from the German labels: extremely slow, very slow, slow, medium, fast, very fast, extremely fast), which was displayed on a monitor screen, by setting a slider to the appropriate position. In addition, the subjects were allowed to choose intermediate values between the given categories. The equidistance between the categories was emphasized by the visual presentation shown in Figure 5, as suggested by [ JM86 ] and [ Möl00 ].

Figure 5. Scale used in order to rate the perceived velocity of the stimulus (the English translation is shown here).

In the initial phase of each session two binaural anchor stimuli were presented to the subjects: One which was introduced as very slow (condition: d = 20 m and v = 40 kph) and one as very fast (condition: d = 4 m and v = 100 kph). These two conditions represented the ones which were rated as slowest or fastest in previous informal listening tests. The listening sessions using the recordings of the motorcycle and of the passenger car have been conducted separately from each other, whereby only the recordings of the particular type of vehicle were presented as anchor stimuli. Thus, there might be a shift in the rating scale between the ratings for the motorcycle stimuli and for the passenger car stimuli.

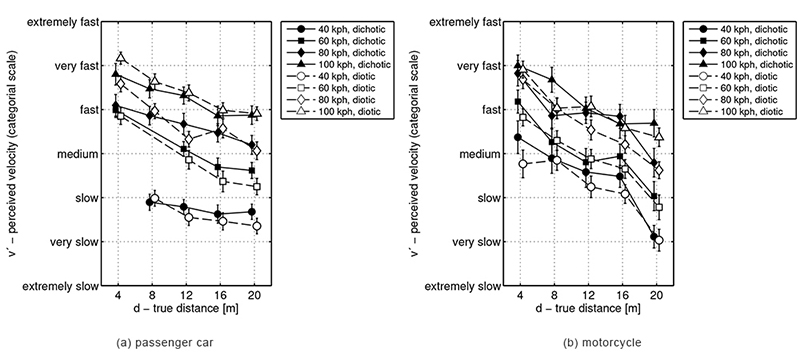

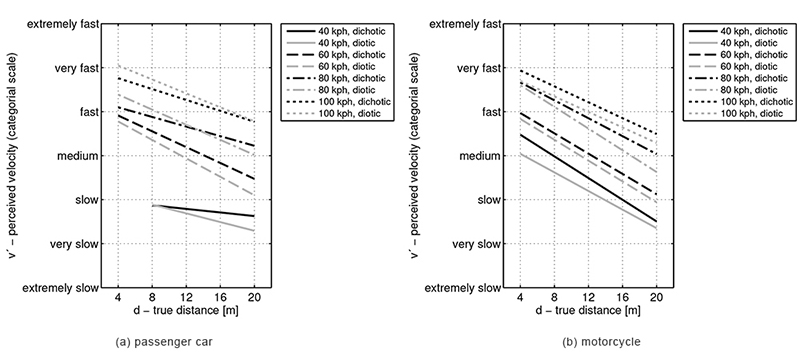

Figure 6a and Figure 6b show that the velocity estimates v' do not only depend on the particular velocity v of the source, but also on true distance d. The same trend can be observed for both vehicle types: With increasing distance to the sound source the vehicles are perceived as being slower (motion parallax).

However, it can be observed that the type of the sound source has a significant influence on velocity perception. Looking at the downward slopes of the regression analysis shown in Figure 7a and Figure 7b, it can be observed that this effect is stronger for the motorcycle than for the passenger car.

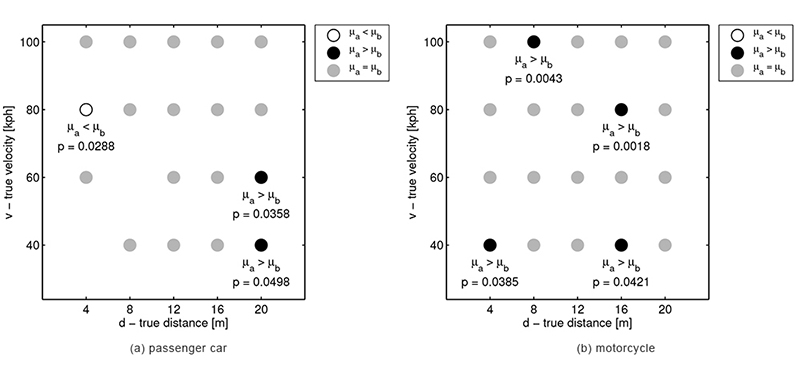

Furthermore, both figures show slight differences between the scores for diotic and dichotic presentation. In [ PS09 ], statistically significant differences between the ratings for the diotic and the dichotic stimuli have already been observed for the passenger car. Here, the significance of the difference between diotic and dichotic presentation has been tested for the motorcycle as well. An analysis of variance (ANOVA) has been applied to the data (cf. [ HEK09 ]) and the tendency of the differences was determined by means of an additional multiple pair comparison (cf. [ Beu07 ]). The results, which are shown in Figure 8a and Figure 8b, indicate that - both for the motorcycle and for the passenger car - there is a significant but weak influence of the binaural cues. The weakness and the variation of the tendency might be caused by the fact that different cues dominate velocity perception for the motorcycle and the passenger car. For example, due to the distinctive harmonic character of the motorcycle's engine sound, the Doppler shift can be detected much more easily than in the case of the passenger car. In contrast, changes of the maximum sound pressure level which depend on the true velocity are much stronger for the passenger car.
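As an illustration of the kind of test involved, the following sketch computes the F statistic of a one-way ANOVA for two groups of ratings (dichotic vs. diotic) for a single condition. It assumes a between-subjects design with independent groups, which may differ from the exact procedure used here, and the ratings in the example are made-up placeholder values, not data from the experiments.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the individual ratings around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


if __name__ == "__main__":
    # Hypothetical ratings on the seven-point scale (1 = extremely slow ... 7 = extremely fast).
    dichotic = [5.0, 5.5, 6.0, 5.0, 6.5]
    diotic = [4.5, 5.0, 5.0, 4.0, 5.5]
    print(one_way_anova_f([dichotic, diotic]))
```

The p-value follows from the F distribution with the returned degrees of freedom; a statistics library would normally be used for that step.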

Figure 6. Influence of the true distance d of the sound source on the perceived velocity v'. Here, the average scores as well as the 95%-confidence intervals are shown in white for the diotic stimuli and in black for the dichotic ones. Two data points corresponding to the estimates based on the passenger car have been omitted due to a microphone calibration issue during the recordings (v = 40 kph at d = 4 m and v = 60 kph at d = 8 m).

Figure 7. Linear regression analysis: A downward slope of the regression lines for the perceived velocity v' = β0 + β1 · d can be observed, which seems to be stronger for the diotic stimuli (grey lines).

Figure 8. Results of the ANOVA: Significant differences between the velocity judgments v' for diotic and dichotic stimuli (P = 0.95 (confidence level); μa ≡ dichotic, μb ≡ diotic) for both vehicles. In addition, the probabilities p are given where the null hypothesis H0 has been rejected. In the case of the passenger car, the results of the ANOVA corresponding to the conditions v = 40 kph at d = 4 m and v = 60 kph at d = 8 m have been omitted due to microphone calibration errors.

Table 2. Correlation coefficients of the regression analysis for the passenger car and the motorcycle data both for the diotic and dichotic presentation.

| | v1 = 40 kph | v2 = 60 kph | v3 = 80 kph | v4 = 100 kph |
|---|---|---|---|---|
| R pass. car, dichotic | -0.87 | -0.97 | -0.99 | -0.96 |
| R pass. car, diotic | -0.92 | -0.98 | -0.91 | -0.97 |
| R motorcycle, dichotic | -0.92 | -0.93 | -0.89 | -0.97 |
| R motorcycle, diotic | -0.91 | -0.98 | -0.99 | -0.94 |

This experiment investigates the way in which the distances of objects passing a listener are determined. For this purpose, various scenarios based on the recordings of the motorcycle and the passenger car were presented to the subjects and their particular distance estimates were evaluated. The scenarios varied with respect to the distance of the passing object and the velocity of the object. The following questions shall be addressed within this experiment:

- How does the perceived distance correlate to the physical parameters of the scenario?
- How do the perceived velocity and the perceived distance vary for different types of moving sound sources?
- To what extent does the velocity of the object influence the perceived distance?

Method: Again, the recorded stimuli were conveyed via headphones. In experiment 1, only slight differences between a diotic and a dichotic presentation were observed. As these differences are not the key question of this experiment, only a dichotic presentation was chosen here; the influences of a diotic versus a dichotic presentation have been analyzed in [ PS09 ]. Apart from that, the stimuli were the same as in the first experiment.

Subjects and Stimuli: For the passenger car, the results of 31 subjects, aged between 22 and 38 years, were evaluated; for the motorcycle, 20 subjects, aged from 22 to 30 years, participated. In the experiment, the subjects were asked to rate how they perceived the distance to the object when passing. By means of the described PC-based user interface, the subjects rated the perceived distance on a seven-point category scale (very far away, far away, rather far away, medium, rather close, close, very close; presented in German) as shown in Figure 9.

In the introductory phase of the particular sessions two anchor stimuli were presented to the subjects. The stimulus corresponding to a distance of four meters and a velocity of 60 kph was introduced as close, while the stimulus corresponding to d = 20 m and v = 60 kph was introduced as being distant. As in 'Experiment 1', the stimuli for the motorcycle and the passenger car were presented in different sessions of the experiment. Thus, two different anchoring stimuli were used in two sessions, which might result in a slight offset between the rating scales for the motorcycle and the passenger car.

Figure 9. Scale in order to rate the perceived distance when passing (the English translation is shown here).

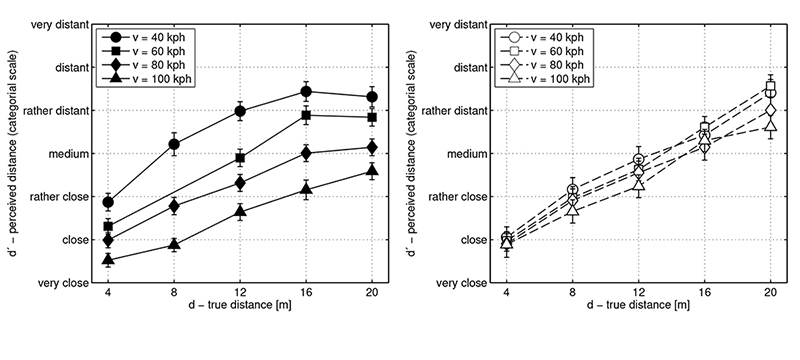

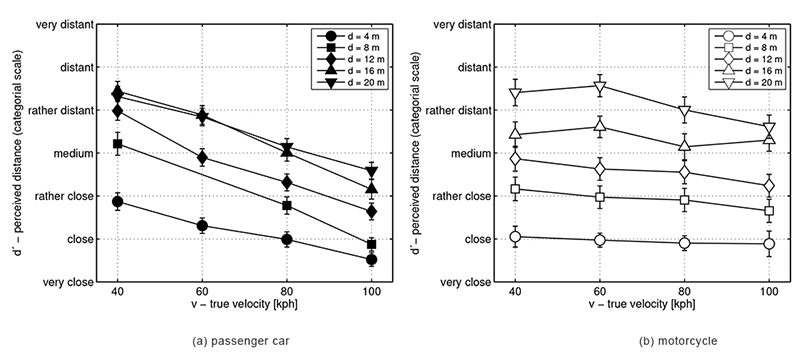

Results and Discussion: Figure 10 shows the average distance estimates d' versus the true distance d of the passing vehicle. The data points have been grouped by the actual vehicle speed, forming four graphs. In case of the passenger car (Figure 10a), a significant offset between the curves for the different velocities is observable. In contrast, the curves based on the motorcycle stimuli nearly coincide. Hence, in case of the passenger car the distance estimates depend significantly on the velocity of the object, which is additionally shown in Figure 11a. An increase of velocity causes the listeners to perceive the vehicle passing at a shorter distance. In [ PS09 ] it has been deduced (applying the stimuli of the passenger car) that this effect is mainly caused by the increase of the sound pressure level towards shorter distances. With regard to the motorcycle, this effect is much weaker: the perceived distance depends only slightly on the true velocity.

However, these results can be explained by looking at the sound pressure levels of the motorcycle: In contrast to the passenger car, the influence of velocity on the sound pressure at the listener's position is far less pronounced (see Figure 11b). In order to demonstrate the dependency of the estimated distance on the sound pressure level, the maximum sound pressure levels at the moment of passing (Lmax) were extracted from the recordings and compared with the distance judgments.

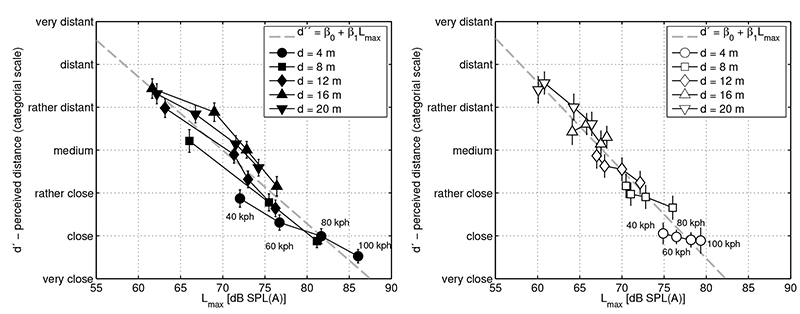

The perceived distances versus the maximum sound pressure levels are shown in Figure 12. The figure makes clear that both for the passenger car and for the motorcycle the main parameter for the determination of distance is the maximum sound pressure level at the listener's position. Additionally, the stimuli of the motorcycle vary for each specific distance much less than the ones of the passenger car. This corresponds to the peak level analysis which has been shown in Figure 4a and in 4b. In comparison to a passenger car, a totally different relationship between velocity and sound pressure level is given. Thus, it can be expected that the relationship between the estimated distance and the velocity of the vehicle is different as well.

Furthermore, a linear regression analysis was applied to the extracted sound pressure levels and the average distance estimates. In case of the passenger car, the regression coefficients equal β0 = 14.9 and β1 = -0.17. For the motorcycle, the regression analysis yields the coefficients β0 = 16.9 and β1 = -0.21. In order to verify the influence of the maximum sound pressure level as a main contributor to distance perception, a correlation analysis of the averaged ratings and the particular sound pressure levels was calculated. The correlation coefficients yield Ra = 0.95 for the passenger car and Rb = 0.97 for the motorcycle. Both conditions show a high correlation between sound pressure level and estimated distance.
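The regression line can be evaluated directly to see how a change in Lmax maps onto the average distance rating. The following is a minimal sketch that uses the passenger car coefficients reported above; the function name and the example levels are hypothetical and were chosen only for illustration, and the result is expressed in the units of the rating scale rather than in meters.

```python
def predicted_distance_rating(l_max_db, beta0, beta1):
    """Evaluate the regression line d'' = beta0 + beta1 * Lmax."""
    return beta0 + beta1 * l_max_db


# Regression coefficients reported in the text for the passenger car.
PASSENGER_CAR = (14.9, -0.17)

# Hypothetical maximum levels in dB, chosen only for illustration.
for l_max in (60.0, 70.0, 80.0):
    rating = predicted_distance_rating(l_max, *PASSENGER_CAR)
    print(f"Lmax = {l_max:.0f} dB -> d'' = {rating:.2f}")
```

Because β1 is negative, a higher maximum level always yields a lower (closer) predicted rating, which mirrors the trend shown in Figure 12.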

Figure 10. Perceived distance d' vs. the true distance d of the source at the moment of passing. Shown are the averaged estimates as well as the 95%-confidence-intervals for the passing vehicles.

Figure 11. Perceived distance d' depending on the true velocity v. Shown are the average estimates as well as the 95%-confidence-intervals for the passenger car and the motorcycle.

Figure 12. Influence of Lmax on the distance-estimates d'' in case of the passenger car and the motorcycle. Given are the mean values and the 95%-confidence-intervals. Additionally the result of a linear regression is shown. The coefficients of the regression line d'' have been calculated to β0 = 15 and β1 = -0.17 in case of the passenger car and β0 = 19.96 and β1 = -0.21 in case of the motorcycle. The correlation coefficients equal Ra = 0.95 (passenger car) and Rb = 0.97 (motorcycle).

Further research on human perception of moving sound sources is beneficial not only for scientific research, but also for various fields of application (cf. [ SC98 ]). In practice, there exist many types of scenarios where a plausible presentation of moving sound sources is of relevance, e.g. when pedestrians participate in traffic situations. Several subjects and applications will be discussed in the following.

Within the automotive industry, in-car and exterior driving simulators have become important tools during the last two decades. The adequate simulation of complex scenarios as well as virtual prototyping have become more and more important not only for vehicles with internal combustion engines (ICE-vehicles), but also for the research and development of hybrid or electrical vehicle concepts (HEV/BEV). Here, the investigation of perceptual aspects with respect to pedestrian safety in traffic situations gains importance. Such vehicles are less detectable for pedestrians and thus potentially increase the risk of accidents due to reduced powertrain noise [ Ker06, KF08, Zel09, RR09 ].

Recently, new regulations and requirements about the exterior noise of road vehicles have been discussed and issued [ NHT11, NHT13, UNE13, Gov10 ]. In the near future, this will likely have a strong influence on the development of new vehicle fleets, e.g. through the integration of exterior sound warning devices [ UNE10 ]. A virtual environment generator, which adequately features the auralization of moving objects, could reduce the need for expensive prototype vehicles or facilitate the development process, e.g. in case of attributes like exterior noise legislation, sound quality assessment and brand sound design [ BG03, BK12b, BK12a ].

In civil engineering, the investigation and avoidance of annoyance and noise pollution effects caused by traffic noise plays an important role [ SKG02 ]: An adequate auralization of non-stationary sound sources in the context of traffic scenarios can be used in order to create soundscapes of urban environments for psychoacoustic studies. Here, the usage of real-world recordings can be complicated, elaborate and - moreover - insufficient. A rendering engine, which allows a flexible control and parameter setting of the synthesized scenarios, can be a powerful tool within this subject.

Moving objects can be a valuable extension to HMI-systems and could therefore serve as an information carrier in addition to other modalities. In this context, applications within e.g. auditory assistance and guidance tasks, warning signals in vehicle navigation and in driver assistance systems could be possible. The reader is referred to [ Dür01 ] for a detailed investigation on the design of auditory displays by using stationary sound sources. Furthermore, an overview of the topic and a discussion on various aspects is given in [ CV11 ].

Besides, the integration of moving sound sources into a toolbox for psychoacoustic research seems valuable. Moreover, this toolbox could be utilized within audiology for the development of new screening tests or within therapy. Finally, in case of the composition of computer music, in gaming and sound installations as well as in cinematography moving sources can be an extension to already existing means of expression [ Cho77, PMBM08 ].

As described in the previous section, an integration of the relevant cues for distance perception of moving sound sources into an auditory virtual environment can be useful in various fields of research and development. In the following, it is described how to integrate the relevant cues adequately into a 3-D audio system.

The integration of moving objects has a strong effect on the system design and architecture of such an auditory virtual environment generator. In comparison with AVEs, which only feature stationary sources, the employed signal processing algorithms and sound field models become even more complex and computationally expensive (cf. [ Dür01, vK08, Str00 ]). Not all architectures of virtual environment generating systems are capable of handling moving sound sources. This holds true especially for systems applying measured or pre-simulated head-related impulse responses of the complete room (e.g. the BRS-System [ MFT99 ]).

Building a physically appropriate model for auralizing moving sound sources in the context of Wave Field Synthesis-Systems (WFS) has been investigated in [ AS08a, AS08b, FGKS07 ]. However, the realization of such a model in a real-time system might be too elaborate for many applications and it might be advantageous to consider only specific cues of the moving sound source.

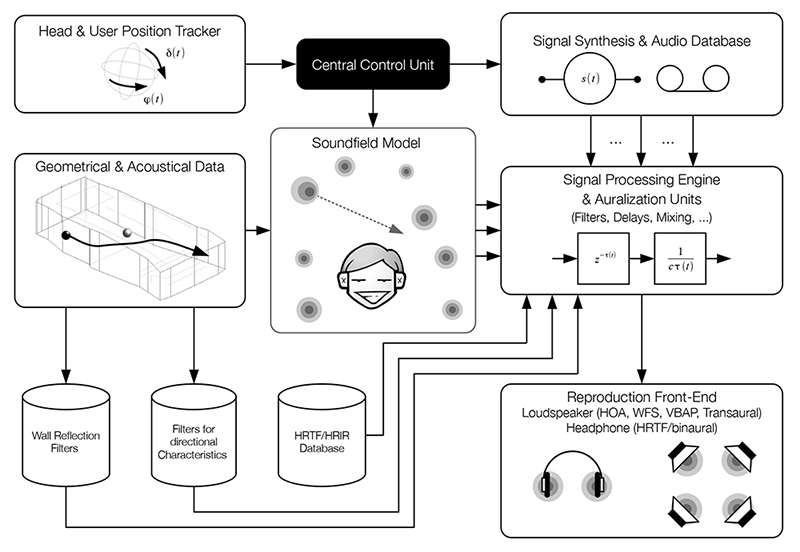

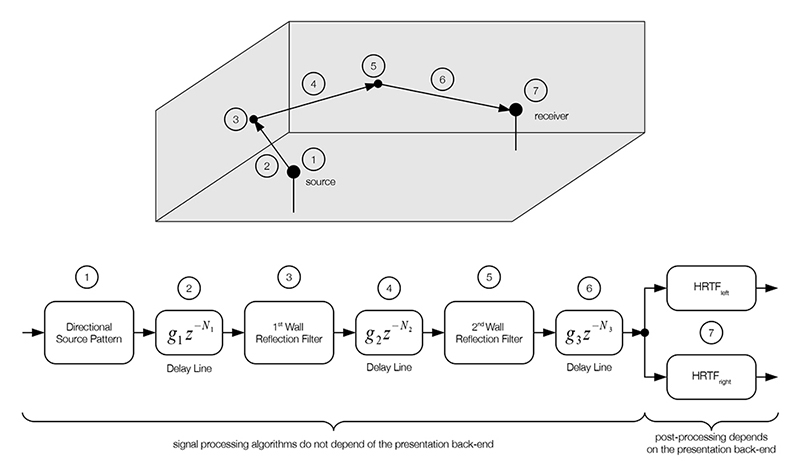

Figure 13 shows a typical structure of the system architecture of an auditory virtual environment generator. The geometrical and acoustical data of the room are used to calculate the sound field model at the user's actual position in the virtual environment. In the signal processing engine, a convolution of the audio data with the respective HRTFs for the direct sound and each reflection needs to be calculated. Applying additional filters and propagation delays allows the direct sound and the reflections to be auralized as they would occur in the respective real environment. The auralization can be performed binaurally via headphones [ Bla97, Str00 ] or via loudspeakers [ BR09, Bla08 ].

For loudspeaker-based solutions, Higher Order Ambisonics (HOA), transaural methods with dynamic crosstalk cancellation, Vector-based Amplitude Panning (VBAP) or near-speaker panning (cf. [ SH07 ]) can be used. Nowadays, Wave Field Synthesis systems can be applied as well in order to auralize complex scenes [ Ber88 ], and several investigations with respect to the auralization of moving objects have been conducted [ AS08a, FGKS07 ].

With regard to the auditory virtual environment, mainly the sound field model and the signal processing engine need to be modified and adapted when integrating moving sound sources into the virtual environment generating system. For further discussion on the system architecture and structures of AVEs the reader is referred e.g. to [ BLSS00, Nov05, Len07, SB00, Tsi01, Tsi98, Vor08 ].

When enhancing the existing sound field model of virtual auditory environment generators, it has to be carefully investigated which of the cues of moving sound sources need to be adapted in order to give a plausible presentation to the listener. The results of the psychoacoustic experiments have shown that not all of the cues (cf. Table 1) have the same impact on perception. Depending on the available computation power and the requested quality, not all of the cues need to be considered, and some of them can be rendered less precisely.

A physically exact sound field model is not always appropriate for the simulation. Replacing or extending it with a sound field model that has been created on the basis of psychoacoustic insights can possibly bring some benefits: reduced computation load, improved scalability of the employed auralization units and, in consequence, less hardware effort. These benefits are extremely relevant when the simulation of complex scenarios in real-time is required. However, when implementing a tool for further psychoacoustic research on moving sound sources, a physically exact model needs to be used as a first step. As a second step, the exact model could then be utilized in order to create adapted sound field models according to psychoacoustic effects.

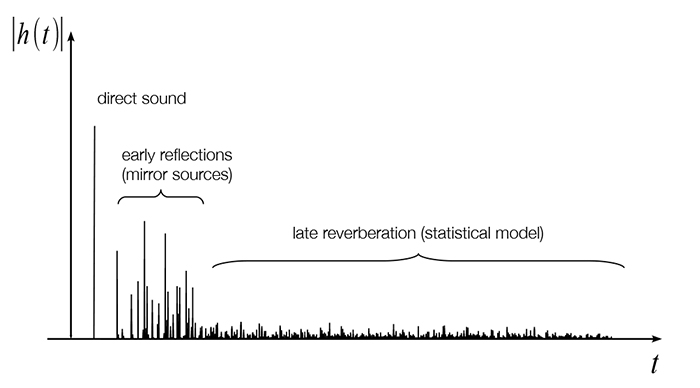

In order to create a plausible presentation of the virtual world and to account for (head) movements of the listener, the sound field model needs to be updated in real-time. Figure 14 shows an example of a room impulse response that can be divided into three sections: direct sound, early reflections and late reverberation (cf. [ JCW97, Vor08 ]). In case of a relative movement between source and receiver, all of these sections become time-variant and are thus affected by pitch changes due to the Doppler effect (cf. [ Str00 ]). Both for the direct sound and the early reflections, the incidence direction, the change in sound pressure level due to the distance law and the propagation delays need to be updated in real-time [ BLSS00 ]. Furthermore, the pattern of the reflections depends on the source and listener position due to room dimensions or obstacles inside of the simulated environment. As an example, Figure 16 shows the 'time-variant reflectogram' of a simulated room impulse response for a rectangular room with a moving source and a stationary receiver.
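The quantities that have to be updated for the direct sound can be illustrated with a small calculation. The following is a minimal sketch, assuming a point source in the free field that passes a stationary listener on a straight line with constant speed; the function name, the dictionary keys and the speed of sound of 343 m/s are assumptions made for illustration and do not stem from the experiments. All values refer to the emission time, so the listener hears the corresponding sound one propagation delay later.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees Celsius


def direct_sound_parameters(t_emit, speed, min_distance, c=SPEED_OF_SOUND):
    """Direct-sound parameters for a source passing a stationary listener.

    The source moves on a straight line with constant speed (m/s) and is
    closest to the listener (min_distance > 0, in m) at t_emit = 0.
    """
    along = speed * t_emit                 # position along the path in m
    r = math.hypot(min_distance, along)    # source-listener distance in m
    r_dot = speed * along / r              # radial velocity, negative while approaching
    return {
        "distance_m": r,
        "delay_s": r / c,                  # propagation delay
        "gain": 1.0 / r,                   # 1/r distance law (free field, point source)
        "doppler_factor": c / (c + r_dot), # received frequency / emitted frequency
    }


# One condition from the experiments: v = 60 kph, passing at d = 4 m.
for t in (-2.0, 0.0, 2.0):
    print(t, direct_sound_parameters(t, speed=60.0 / 3.6, min_distance=4.0))
```

In a complete image source model, the same computation would be repeated for every image source, with the wall reflection filters applied in addition.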

The time-variant convolution integral is described by the following equation:

$$ g(t) = \int_{-\infty}^{\infty} h(\tau, t)\, s(t - \tau)\, d\tau \qquad (1) $$

with:

- s(t) := input signal
- h(τ,t) := time-variant impulse response
- g(t) := output signal

Consequently, the relation between the time-variant impulse response and the time-variant transfer function can be described by the Fourier transform (cf. [ Lin04 ]):

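$$ H(f, t) = \int_{-\infty}^{\infty} h(\tau, t)\, e^{-j 2 \pi f \tau}\, d\tau \qquad (2) $$

$$ h(\tau, t) = \int_{-\infty}^{\infty} H(f, t)\, e^{j 2 \pi f \tau}\, df \qquad (3) $$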
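In discrete time, the time-variant convolution of equation (1) can be written as g[n] = Σ_k h[k, n] · s[n − k], i.e. every output sample is computed with the impulse response that is valid at that instant. The following is a minimal sketch of this idea in plain Python; the function name and the per-sample storage of the impulse responses are assumptions made for readability, and a real-time system would rather use block-based processing.

```python
def time_variant_convolution(signal, impulse_responses):
    """Compute g[n] = sum_k h[k, n] * s[n - k].

    impulse_responses[n] holds the impulse response valid at output
    sample n as a list of taps indexed by the delay k.
    """
    output = []
    for n in range(len(signal)):
        acc = 0.0
        for k, tap in enumerate(impulse_responses[n]):
            if n - k < 0:
                break  # no input samples before the start of the signal
            acc += tap * signal[n - k]
        output.append(acc)
    return output


# Hypothetical example: the system switches from a pass-through to a
# one-sample delay after the second output sample.
s = [1, 2, 3, 4]
h = [[1, 0], [1, 0], [0, 1], [0, 1]]
print(time_variant_convolution(s, h))
```

For a time-invariant system, all entries of `impulse_responses` are identical and the function reduces to an ordinary convolution truncated to the length of the input.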

For more details on time-variant systems, the reader is referred to [ Lin04, Rup93 ], which treat the subject in the context of wireless communication systems, namely Doppler/fading channels. The signal block diagram of an auralization unit for rendering early reflections, based on the image source model, is shown in Figure 15. The block diagram takes into account the directional pattern of the source, the time delays of the propagation paths, wall reflection filters and binaural rendering by using head-related transfer functions (cf. [ Str00 ]).

In order to simplify the sound field model, it can be considered to apply only a static rendering of some sound field parameters. For example, only the amplitudes and possibly the incidence directions of the early reflections are updated, but the sound field is not completely re-calculated in each time frame. This strategy results in a decreased computation effort and a reduction of the complexity of the implementation.

Additionally, a model-based adaptation of the diffuse sound field, i.e. of the late reflections within the room impulse response, might be considered. Strauss [ Str00 ] investigated the influence of a moving sound source on the statistical properties of late reverberation (e.g. average Doppler frequency scaling factors of the late reverberation tail). Here, the statistical model can be used in order to approximate the properties of the late reflections of the room impulse response by means of suitable reverberation and convolution algorithms (cf. [ Gar94, MT99, Str00, Wef08, Zöl05, Zöl11 ]).

One further step can be taken to simplify the model: It might be appropriate for many scenarios to modify only the direct sound depending on the movement of the sound sources and to leave the reflections unchanged. Moreover, it is possible to render only the reflections which are relevant in order to create a proper distance percept (cf. [ Dür01, Dür00 ]). One further strategy could be to apply an amplitude threshold and to render only the image sources that exceed the threshold. However, trade-offs like an altered spatial impression of the rendered environment due to omitted image sources have to be taken into account (cf. [ Dür00 ]).
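The amplitude threshold strategy can be sketched as follows. This is only an illustration under simplifying assumptions: each image source is reduced to a position and one broadband reflection factor, the amplitude follows the 1/r distance law, and the threshold is applied relative to the strongest contribution. The data layout, the function name and the example scene are hypothetical.

```python
import math


def select_image_sources(image_sources, listener, threshold_db):
    """Return the image sources whose level, relative to the strongest
    contribution, is at or above threshold_db (a negative value in dB)."""
    amplitudes = []
    for source in image_sources:
        distance = math.dist(source["position"], listener)
        # 1/r distance law times the accumulated wall reflection factor
        amplitudes.append(source["reflection_gain"] / distance)
    strongest = max(amplitudes)
    selected = []
    for source, amplitude in zip(image_sources, amplitudes):
        level_db = 20.0 * math.log10(amplitude / strongest)
        if level_db >= threshold_db:
            selected.append(source)
    return selected


# Hypothetical scene: direct sound plus two image sources.
listener = (0.0, 0.0, 0.0)
sources = [
    {"name": "direct", "position": (2.0, 0.0, 0.0), "reflection_gain": 1.0},
    {"name": "first order", "position": (6.0, 0.0, 0.0), "reflection_gain": 0.8},
    {"name": "second order", "position": (20.0, 0.0, 0.0), "reflection_gain": 0.5},
]
kept = select_image_sources(sources, listener, threshold_db=-20.0)
print([source["name"] for source in kept])
```

With a moving source, the selection would have to be re-evaluated in every time frame, since image sources can cross the threshold as the geometry changes.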

As incidence direction, amplitude and pitch of the reflections have minor impact on perception, this seems to be acceptable for a plausible representation of the real world. However, it can be complicated to find simplified strategies which allow a perceptually robust and plausible presentation of moving objects, due to the time-variant properties of the regarded systems.

Figure 14. Room Impulse Response (RIR) with direct sound, early reflections (image sources) and the late reverberation tail (which can be described by a statistical model): Each section of the room impulse response is time-variant due to the relative movement of the source/receiver and furthermore is more or less affected by Doppler shift. The absolute values of the impulse response vector h(t) have been plotted versus time in order to give a clearer illustration of the various sections.

As mentioned in the previous section, the sound field model has to be extended with regard to time-variant systems as soon as movements of the source and the receiver in the virtual environment need to be auralized (cf. [ Lin04, Rup93 ]). If the filters and parameters for direct sound and reflections are handled frame-based, an adequate interpolation between the different time frames needs to be applied. The temporal variation of the amplitude, the change of the interaural parameters and the pitch changes need to be interpolated. Several methods, in particular with respect to HRTF/HRIR interpolation algorithms, have been developed in the past and can be applied here (e.g. [ FBD02, HP00, LB00, KD08 ]). The majority of these methods have already been implemented in state-of-the-art virtual environment generating systems. Even though the parameters need to be carefully adjusted, no extra effort has to be taken here in the implementation.
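For the amplitude, the simplest form of such an interpolation is a linear ramp between the gain of the current frame and the gain of the next one. The following minimal sketch shows this for a single frame; it covers only the amplitude, not the interpolation of HRTFs or delays treated in the cited literature, and the function name is hypothetical.

```python
def apply_interpolated_gain(frame, gain_start, gain_end):
    """Scale one frame while ramping the gain linearly from gain_start
    towards gain_end, which is reached at the first sample of the next frame."""
    n = len(frame)
    return [
        sample * (gain_start + (gain_end - gain_start) * i / n)
        for i, sample in enumerate(frame)
    ]


# Hypothetical frame of four samples with a fade-in from 0 to 1.
print(apply_interpolated_gain([1.0, 1.0, 1.0, 1.0], 0.0, 1.0))
```

Because the ramp ends exactly where the next frame starts, consecutive frames join without a step in the gain, which avoids audible clicks at the frame boundaries.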

Different methods can be applied in order to auralize pitch changes (cf. [ Zöl05, Zöl11 ]): On the one hand frequency-domain methods directly adapt the pitch according to the movement of the listener and the sound source. These methods are easy to implement and are part of a standard repertoire of signal processing algorithms. The required computation power is low and thus the method can be easily used for a plausible auralization. One disadvantage of frequency domain-based pitch shift algorithms is that these do not correctly modify the phase information of the original signal. Moreover, the variation of the delay due to the modified distance between sound source and receiver is not modeled appropriately. However, pitch shifting by means of frequency-domain algorithms might be interesting for psychoacoustic research as it offers the possibility to control the signal-duration and the frequency-scaling factors independently from each other.

On the other hand, time-domain methods can be used which modify the length of the audio signal and thereby indirectly modify the pitch. When appropriately realized, these methods are a correct representation of the real world, at least from a physical point of view. However, in order to account for the modified sampling rate, fractionally addressed delay lines need to be applied (cf. [ Smi10, Roc00 ]), and non-harmonic distortions due to interpolation algorithms (jitter) need to be avoided (cf. [ Bor08, Fra11b, Fra11a, Smi10, Str00, Zöl05 ]). In principle, both frequency-based and time-based methods have to be applied separately to the direct sound and to each of the reflections. However, as has already been described above, it might also be possible to modify the direct sound only and to let the reflections remain unchanged.
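The time-domain approach can be illustrated with a delay line that is read at a fractional, time-varying position. The following minimal sketch uses linear interpolation between neighbouring samples, which is the simplest choice and is used here only for illustration; higher-order interpolators are typically needed to keep the distortions mentioned above low. The function name is hypothetical, and the delays are given in samples per output sample.

```python
import math


def render_with_variable_delay(signal, delays_in_samples):
    """Read signal through a time-varying fractional delay using
    linear interpolation between the two neighbouring input samples."""
    output = []
    for n, delay in enumerate(delays_in_samples):
        position = n - delay            # fractional read position in the input
        index = math.floor(position)
        frac = position - index
        if index < 0 or index + 1 >= len(signal):
            output.append(0.0)          # read position outside the available input
            continue
        output.append((1.0 - frac) * signal[index] + frac * signal[index + 1])
    return output


# Hypothetical test signal (a ramp) read with a constant delay of 1.5 samples.
ramp = [float(i) for i in range(8)]
print(render_with_variable_delay(ramp, [1.5] * 8))
```

A delay that decreases over time, as for an approaching source, makes the read position advance faster than the output index and therefore raises the pitch; an increasing delay lowers it.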

Figure 15. Block diagram: Example of a single auralization unit of an early reflection (2nd order) based on the image source model. Moreover, the directional characteristics of the source are taken into account. The upper image shows the corresponding elements of the image source or sound field model, namely the directional characteristics of the source (#1), the propagation paths (#2, #4 and #6), the wall reflections (#3 and #5) and the receiver (#7) (after [ Str00 ]).

Figure 16. Example: Reflectogram of a normalized time-variant room impulse response of a rectangular room resulting from a moving point source with constant speed (vx = 1 m/s) and a stationary receiver: The source approaches the receiver on a straight line and arrives after 15 seconds. Here, only the direct sound and the early reflections (image sources) are considered. Furthermore, Doppler shift was not taken into account (low velocity of the source). Moreover, the level of each element of the impulse response vectors has been calculated (LhRIR (τ, t) = 20log10(|hRIR,norm(t)|)) in order to show the relation and progression of the direct sound and the reflection patterns corresponding to the simulated image sources. The x-axis shows the time vector and the y-axis shows the sample-index of the impulse response, while the z-axis shows the colormap corresponding to the audio level LhRIR [dBFS] of the reflections.

![Figure 16: Reflectogram of a normalized time-variant room impulse response](figure16.jpg)

A great variety of technical solutions and virtual environment generating systems exists. Depending on the sound field model the complexity of an adequate consideration of moving sound sources in the system architecture varies significantly. In the following only a selection of a few AVE-systems shall be named as examples:

One commercially oriented tool for spatial modeling of sound sources is the Spatial Audio Workstation by IOSONO [1] [ MSPB10 ]. In combination with the specific Spatial Audio Processor [ IOS13b ] it supports headphone-based and loudspeaker-based auralization. So far, this system is capable of simulating moving sources under free field conditions but without featuring the simulation of reflections/reverberation resp. of time-variant room impulse responses (according to [ IOS13a ]).

As commercial products designed for applications in the automotive industry, the Brüel & Kjær NVH Vehicle Simulator and PULSE - Exterior Sound Simulator (ESS) [2] are interesting tools in order to simulate and auralize scenarios containing moving vehicles (cf. [ BK12b, BK12a ]). Here, it is possible to create scenarios from the perspective of a pedestrian who is able to move freely through traffic situations, e.g. in order to evaluate the exterior warning sounds of electric vehicles. In addition, it is possible to create scenarios from the driver's perspective. In this case, passing vehicles can be auralized and realistic traffic scenarios can be created, similar to the scenarios which have been investigated in the psychoacoustic experiments within this article.

The freely available SoundScapeRenderer [ AGS08, GAM07 ] is a tool which is capable of auralizing sound fields for different reproduction set-ups. Both loudspeaker-based and headphone-based systems are supported. An enhancement of the SoundScapeRenderer towards moving sound sources is comparatively easy as the code has been published by the development team [3]. However, the SoundScapeRenderer is not capable of simulating reflections in the virtual environment. Thus, possibly only a simplified sound field model can be applied which modifies the direct sound alone.

Furthermore, a freely available toolbox called AudioProcessingFramework - which has been partly created by the developers of the SoundScapeRenderer - could be extended by suitable algorithms in order to simulate moving objects (cf. [ GS12 ]). Then, the AudioProcessingFramework could serve as a basis for the development of new 3-D audio applications featuring moving objects.

Two experiments have been conducted in order to understand distance perception and velocity perception of different types of moving sound sources. The sound sources were moved on a rectilinear path and passed the listener at a specific distance. The subjects were asked to determine both the velocity of the object (cf. Experiment 1) and the minimal distance from the listener (cf. Experiment 2).

The results of the first experiment can be summarized as follows: The perceived velocity strongly depends on the minimum distance of the sound source's trajectory. The same trend can be observed for both vehicle types: An increase of distance results in a decrease of the perceived velocity. The strength of this effect seems to be signal-dependent. In this experiment the observed effect is stronger in case of the motorcycle than for the passenger car. Binaural effects which have been proven to be significant for the passenger car (cf. [ PS09 ]) can be observed for the motorcycle as well. However, significance can only be proved for some of the stimuli.

In Experiment 2 the parameters relevant for distance perception of moving sound sources have been analyzed. Both for the motorcycle and for the passenger car the main parameter for the determination of distance is the maximum sound pressure level at the listener's position. With regard to the perceived egocentric distance, it has been shown that the dependency on the velocity of the object is much lower for the motorcycle than for the passenger car. A reason for this is that the passenger car's sound is much more strongly affected by the true velocity (e.g. by tire noise). A regression and a correlation analysis have shown that the sound pressure level is the main parameter for distance estimation in an environment with low reverberation.

On the one hand, human distance perception is not independent of velocity. On the other hand it cannot be deduced directly from the velocity. The results of experiment 2 show that the distance between a moving object and the listener can be controlled by a simple adaptation of the sound pressure level. However, in [ PS09 ] a significant decrease in plausibility is obtained by a manipulation of the sound pressure level compared to a scenario where other dynamic and static cues are adapted as well.

Different applications have been identified and discussed which can benefit from an adequate understanding of the perception and presentation of moving sound sources. A variety of possible applications exists in industry, e.g. in the automotive industry, civil engineering and human-machine interfaces. Furthermore, such systems can be applied within psychoacoustic research and audiology. The results of these experiments are useful for many applications in the area of virtual auditory environments. Distance perception of moving sound sources plays an important role in the creation of virtual environments in which the listener and the sound-radiating objects can move freely [ Str00, Str98b, Str98a ]. Driving simulators can serve as a typical example, where a proper auralization of moving sound sources may be required.

Finally, it has been described in which way systems that generate virtual auditory environments need to be extended and which elements in the system architectures need to be modified. It has been shown that, depending on the required accuracy, already existing virtual auditory environment generators can be easily adapted to handle moving sound sources.

One of the biggest challenges is the creation of plausible and perceptually robust rendering strategies. For instance, one approach could be to render the most important cues with higher precision and to handle the remaining cues with less priority. As a consequence, it might be possible to reduce computational effort. However, further psychoacoustic research needs to be done in this subject in order to validate such strategies.

The recordings and experiments have been conducted within several diploma theses at the Cologne University of Applied Sciences. The authors would like to thank Nicolas Ritschel who contributed to this paper by performing the psychoacoustic experiments based on the recordings of the motorcycle within his diploma thesis. Moreover, the authors would like to thank Holger Strauss who clarified our questions on the sound field model via e-mail correspondence. Last but not least, the authors would like to thank the anonymous reviewers for their comments and ideas in improving the article.



License

Anyone may electronically transmit this work and make it available for download under the terms of the Digital Peer Publishing License. The license text is available online at http://www.dipp.nrw.de/lizenzen/dppl/dppl/DPPL_v2_de_06-2004.html.

Recommended citation

Christian Störig and Christoph Pörschmann, Investigations into Velocity and Distance Perception Based on Different Types of Moving Sound Sources with Respect to Auditory Virtual Environments. JVRB - Journal of Virtual Reality and Broadcasting, 10(2013), no. 4. (urn:nbn:de:0009-6-38009)

When citing this article, please give the exact URL and the date of your last visit to this online address.