3-D Audio in Mobile Communication Devices: Effects of Self-Created and External Sounds on Presence in Auditory Virtual Environments

urn:nbn:de:0009-6-26798

Abstract

This article describes a series of experiments which were carried out to measure the sense of presence in auditory virtual environments.

Within the study a comparison of self-created signals to signals created by the surrounding environment is drawn. Furthermore, it is investigated if the room characteristics of the simulated environment have consequences on the perception of presence during vocalization or when listening to speech. Finally the experiments give information about the influence of background signals on the sense of presence.

In the experiments subjects rated the degree of perceived presence in an auditory virtual environment on a perceptual scale. It is described which parameters have the most influence on the perception of presence and which ones are of minor influence. The results show that on the one hand an external speaker has more influence on the sense of presence than an adequate presentation of one's own voice. On the other hand both room reflections and adequately presented background signals significantly increase the perceived presence in the virtual environment.

Keywords: Spatial Audio, Virtual Auditory Environments

Subjects: Psychoacoustics, Psychoacoustic Measurement, Room Acoustics, Virtual Environments

Self-created sounds surround us whenever we act in a real environment. We have become used to them and are often not aware of the auditory feedback when interacting with the environment. For example, we produce sounds when typing on a keyboard or while walking, and this feedback helps us to optimize our actions.

A very special form of auditory feedback is provided by the perception of one's own voice. While speaking we hear our own voice and react very sensitively to changes in its sound.

Most commonly, virtual auditory environments are designed to simulate perceptually plausible environments, even though they are in general free of the restrictions of the laws of physics and therefore, environments without a real counterpart can be easily generated.

Especially when using the virtual environment as a tool for psychoacoustic research it is required to know if the subjects in the virtual environment behave similarly to the way they would behave in a real environment. Djelani et al. [ DPSB00 ] have shown that localization in the assessed headphone-based virtual auditory environment differs only slightly compared to the real world.

Presence is a combination of involvement and immersion in a virtual environment. Involvement describes a psychological state of mind experienced as a consequence of focusing one's attention on a coherent set of stimuli. Immersion is a psychological state characterized by perceiving oneself to be enveloped by, included in and interacting with an environment that provides a continuous stream of stimuli and experiences [ WS98 ]. According to Sheridan [ She92a, She92b ] virtual presence is achieved when the sensory information generated by a computer and presented with the appropriate methods causes a feeling of being in the virtual environment. Fontaine [ Fon92 ] and Zeltzer [ Zel92 ] describe how the degree of presence perceived in the virtual environment influences the ability of a subject to perform a task in the virtual environment. In general, in a virtual environment offering a higher degree of presence, a task can be performed better and more efficiently. Thus, in most applications involving an auditory virtual environment, it is desirable to achieve a certain amount of immersion and involvement in the virtual environment.

Several investigations on measuring the sense of presence have been reported in the literature. Different methods can be applied, for example asking the subjects to perform a task in the virtual environment or directly asking the subjects how they perceived presence. For an overview of methods and literature on measuring presence please refer to Witmer and Singer [ WS98 ].

When developing a virtual auditory environment system it is required to create a plausible impression of the environment. The design of the environment to be simulated – i.e. the shape of the room, kind and position of the sound sources in the room, etc. – is important for the listener's perception. The subject shall feel both comfortable and present in the virtual environment.

Knowledge about the different parameters that influence presence, and about how feeling present in an environment affects the actions of a subject, is still scarce. However, according to Gilkey and Weisenberger [ GW95 ], especially sounds which serve neither as a symbol nor as a warning maintain our feeling of being a part of the living world and contribute to our sense of being alive. Thus, it is plausible that self-created sounds may significantly contribute to enhanced presence.

The results presented in this paper are part of a series of studies on virtual auditory environments which were carried out at the Institute of Communication Acoustics at Ruhr-Universität of Bochum. The studies aimed at understanding the influences of different auralization methods on the authenticity and the presence of a virtual auditory environment [ DPSB00, Poer00, Poer01, Pel02 ]. However, the results presented here are new and have not been published elsewhere. They can help to gain information about the influence of self-created sounds on the sense of presence.

The experiments which are described in this paper have been carried out to address the following key questions:

- Which parameters influence the sense of presence during vocalization? Which ones are most important?

- In which way do an appropriate presentation of one's own voice and an external speaker contribute to the sense of presence in a virtual environment?

In a previous study it has been investigated in which way an adequate presentation of one's own voice increases the presence in a virtual environment [ Poer01 ]. Analyzing presence during a conversation task it was shown that an adequate presentation of one's own voice enhances the sense of presence in a virtual environment. In the investigation the presentation of the direct sound component and the reflections of one's own voice were varied. The results show that presence in a virtual environment is increased by a plausible perception of the subject's own voice. Both an appropriate presentation of the direct sound (which is normally modified by wearing headphones) and the reflections contribute significantly to the increase in presence.

A similar approach has been taken by Nordahl [ Nor05 ]. He investigated the influence of self-created sounds of footsteps on presence. The results show that a significant increase of presence can be observed when auditory feedback of the footsteps is additionally presented. However, in the previous studies no attempts were made to compare the influence of self-created sounds with the influence of externally triggered sounds and their implications for presence.

In another study [ VLVK08 ] the benefits of presenting engine sound when auralizing only auditory or auditory-vibrotactile information are described. The authors conclude from the results that self-motion sounds (e.g. the sound of footsteps, engine sound) represent a specific type of acoustic body-centered feedback in virtual environments. Therefore, the results may contribute to a better understanding of the role of self-representation sounds (sonic self-avatars) in virtual and augmented environments. Further studies have investigated the influence of auditory cues on the perception of self-motion [ KZJH04 ]. In [ RSPCB05 ] the influence of auditory cues on the visually-induced self-motion illusion was investigated. A good overview of different studies on self-produced sounds can be found in [ Bal07 ].

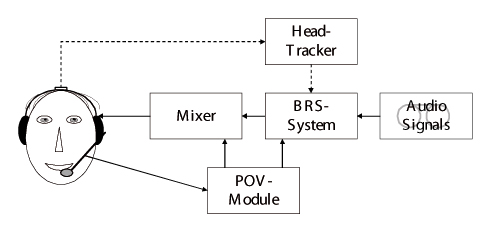

The auditory virtual environment applied in the experiments can be divided into two main components. The BRS system (Binaural Room Scanning) performs the real-time convolution of the anechoic audio signals with the appropriate binaural room impulse response, depending on the subject's orientation.

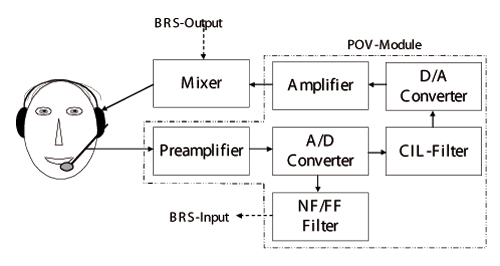

The POV Module (Perception of the Own Voice) is responsible for a plausible presentation of the subject's own voice. The direct sound component of one's own voice is altered when wearing headphones. Compensating for this modification requires a very low latency and is therefore implemented in the POV Module. In combination with the BRS system, the own voice can be presented to a listener as if he/she were in the simulated room.

The BRS system is a jointly developed product by the IRT, Studer Professional Audio AG and the Ruhr-Universität of Bochum. BRS stands for "Binaural Room Scanning". The device is capable of convolving 5 different sound sources with impulse responses of up to 85 ms duration in real time. This auralization system is headphone-based and can work with measured or simulated room-impulse-responses for the acoustical display of rooms. It includes the dynamic convolution of stored binaural room impulse responses, controlled by a head-tracker measuring the head movements in the horizontal plane.
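The head-tracker-controlled selection of stored impulse responses can be illustrated with a small sketch. It assumes the 5° azimuth spacing given for the room database in this article and uses simple linear blending of two neighbouring frequency-domain filters. The blending rule and the function names `neighbours_and_weights` and `blend` are assumptions made for illustration only; the article does not specify the interpolation scheme used in the BRS system.

```python
import math

GRID_STEP_DEG = 5.0  # azimuth spacing of the stored responses (from the article)


def neighbours_and_weights(azimuth_deg, step=GRID_STEP_DEG):
    """Return the two stored azimuths bracketing the head orientation,
    each paired with a linear blending weight (assumed scheme)."""
    lower = math.floor(azimuth_deg / step) * step
    upper = lower + step
    w_upper = (azimuth_deg - lower) / step
    return (lower, 1.0 - w_upper), (upper, w_upper)


def blend(spectrum_a, spectrum_b, w_a, w_b):
    """Blend two complex frequency-domain filters bin by bin."""
    return [w_a * a + w_b * b for a, b in zip(spectrum_a, spectrum_b)]


# Hypothetical head orientation of 12 degrees: the filters stored for
# 10 and 15 degrees are mixed with weights 0.6 and 0.4.
(lower, w_lower), (upper, w_upper) = neighbours_and_weights(12.0)
print(lower, upper, round(w_lower, 3), round(w_upper, 3))
```

A head orientation that coincides with a stored azimuth yields weights of 1 and 0, so the stored filter is then used unchanged.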

In order to achieve a low latency only small block sizes are applied for the transformation into the frequency domain. Thus for a room impulse response with a total length of 4K samples (85 ms at a sampling rate of 48 kHz) a block length of 128 samples was chosen, which results in a latency of 5.3 ms (two blocks). The room database consists of a set of binaural room impulse responses measured at elevations from -45° to 45° and in steps of 5° azimuth. Interpolation between the different directional filters is accomplished in the frequency domain. The total update rate depends on the head-tracker model actually used. In this experiment a Polhemus Fastrak was used, which has a latency of about 20 ms at an update rate of 60 Hz, considering the asynchronous communication paths between head-tracker, microcontroller and DSP.

Due to the dynamic update and interpolation of the whole impulse response the BRS system provides good distance perception and precise localization despite the fact that non-individual HRTFs are used. Previous publications give more details about the system, an evaluation of its performance and the results of listening experiments in which the BRS system was successfully used as auralization hardware for psychoacoustic research [ MFT99, MFT00, HKP99, Pel00 ]. There it has been shown that considering only the horizontal rotation of the subject's head in combination with non-individual HRTFs is adequate for a perceptually good result. A standard room simulation tool developed at the Institute of Communication Acoustics in Bochum [ LB92, Pom93 ] was used to create the appropriate binaural impulse responses for three different rooms.
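The timing figures quoted above follow directly from the sample counts and the sampling rate. The short sketch below reproduces them. The number of segments is included only as an illustration and assumes that the impulse response is split into pieces of one block length each, which the article does not state explicitly.

```python
SAMPLE_RATE_HZ = 48_000
IMPULSE_RESPONSE_SAMPLES = 4096   # "4K samples"
BLOCK_SAMPLES = 128
BLOCKS_OF_DELAY = 2               # "two blocks"
TRACKER_UPDATE_RATE_HZ = 60


def samples_to_ms(n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Convert a number of samples to a duration in milliseconds."""
    return 1000.0 * n_samples / sample_rate_hz


impulse_response_ms = samples_to_ms(IMPULSE_RESPONSE_SAMPLES)
block_latency_ms = samples_to_ms(BLOCKS_OF_DELAY * BLOCK_SAMPLES)
# Assumption: one segment per block length of the impulse response.
segments = IMPULSE_RESPONSE_SAMPLES // BLOCK_SAMPLES
tracker_interval_ms = 1000.0 / TRACKER_UPDATE_RATE_HZ

print(f"impulse response: {impulse_response_ms:.1f} ms")
print(f"block latency:    {block_latency_ms:.1f} ms")
print(f"segments:         {segments}")
print(f"tracker interval: {tracker_interval_ms:.1f} ms")
```

The audio block latency is thus considerably smaller than the head-tracker latency of about 20 ms, so the tracker dominates the delay with which the rendering follows a head movement.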

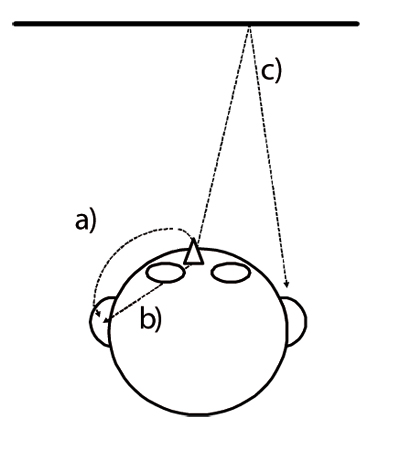

To understand how the POV Module works, a brief description of the way one's own voice is perceived is required. Three components [ LG95 ] influence this perception:

- Sound transmission through the air around the speaker's head to the ear drums (direct air-conducted transmission).

- Internal sound transmission inside the head through bones and the skull to the cochlea (bone-conducted transmission).

- Reflections of one's own voice on acoustically relevant surfaces in the surrounding environment (indirect air-conducted transmission).

The absence of one or more of these components commonly leads to an unnatural perception of one's own voice. For example, the absence of reflections can be perceived while speaking in an anechoic chamber, which can make people who are not used to such an environment feel uneasy. Owing to the reflections, one's own voice sounds entirely different in a living room than in a cathedral. Wearing ear protectors or headphones also leads to a changed perception. Here, not only is the sound transmission around the speaker's head reduced, but the bone-conducted component is also changed by occluding the ear.

To obtain a plausible perception of the own voice in an auditory virtual environment, the listener must be presented with bone-conducted as well as air-conducted sound. As the headphones applied in the experiments do not influence the perception of the bone-conducted sound, it remains unchanged in the simulation and therefore need not be considered.

The airborne sound is changed by any kind of headphones. Therefore the external sound transmission has to be reconstructed within the auditory virtual environment in the same way as it would occur without wearing headphones. The influence of the "real" airborne component, which is still present when wearing open headphones, must be considered.

The voice of the speaker is captured by a close-talk microphone (Sennheiser KE4) in the speaker's near-field at a position 40 mm from the lips. The influence of the insertion loss of the headphones is compensated [ Poer00 ] by adequately filtering the recorded signal (Correction Insertion Loss filter, CIL filter) and presenting it via headphones (Sennheiser HD 540) at the correct level. The most critical requirement for implementing the CIL filter is a low group delay of the hardware on which the filter is realised; the group delay of the A/D and D/A converters is of particular importance here. A delay of less than 300 μs between the detection of the sound-pressure signal at the mouth microphone and its presentation at the headphones must be achieved in order to maintain the phase information. This delay corresponds to a sound-propagation distance of about 0.1 m, which is of the order of magnitude of the distance between the mouth reference point (40 mm in front of the lips) and the ear reference point (entrance of the ear canal). The sound pressure in the ear canal caused by the headphone signal interferes with the one caused by the air propagation around the head. Consequently, the phase of the headphone signal is important in order to avoid unwanted superposition and comb-filter effects. The described compensation also covers the changes caused by the occlusion effect, which manifests itself in the form of a changed sound pressure in the ear canal caused by the headphones [ Poes86 ]. The POV Module is implemented on a Tucker-Davis system, which fulfills the low-latency requirements.

The reflections of the speaker's voice are calculated within the BRS system in the same way as the reflections of the other sound sources, with two exceptions. Firstly, direct sound is not considered within the BRS system. Secondly, the reflections to be simulated in the virtual environment usually occur in the far-field of the speaker, whereas the microphone signals are recorded at the mouth reference point in the near-field. To correct for this, the microphone signals are filtered as if they stemmed from a microphone in the far-field (NF/FF filter). Figure 2 shows the structure of the POV Module.
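The relation between the 300 μs delay limit and the distance of about 0.1 m, and the reason why a larger delay would cause comb-filter effects, can be sketched numerically. The speed of sound of 343 m/s is an assumed value for air at room temperature, and the notch formula describes the idealised case of two equally loud copies of the same signal; both are simplifications and not taken from the article.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees Celsius


def propagation_distance_m(delay_s, speed_m_s=SPEED_OF_SOUND_M_S):
    """Distance sound travels in air during the given delay."""
    return speed_m_s * delay_s


def first_comb_notch_hz(delay_s):
    """Lowest cancellation frequency when a signal is summed with an
    equally loud copy delayed by delay_s (idealised comb filter)."""
    return 1.0 / (2.0 * delay_s)


def phase_shift_deg(delay_s, freq_hz):
    """Phase shift that a pure delay introduces at a given frequency."""
    return 360.0 * freq_hz * delay_s


delay_s = 300e-6  # upper limit quoted in the article
print(f"distance:    {propagation_distance_m(delay_s):.3f} m")
print(f"first notch: {first_comb_notch_hz(delay_s):.0f} Hz")
print(f"phase shift at 1 kHz: {phase_shift_deg(delay_s, 1000.0):.0f} degrees")
```

Under these assumptions, an uncompensated extra delay of 300 μs between the headphone signal and the airborne path would place the first cancellation within the frequency range of speech, which illustrates why the phase of the headphone signal matters.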

A detailed description of the POV Module and the measurements required to obtain the filter coefficients can be found in previous publications [ Poer00, Poer01 ].

Figure 3 shows the structure of the complete system. The BRS-System receives as input the audio signals of the sound sources (external speaker, background signals) as well as the output signal of the NF/FF filter of the POV Module. The directivity of the speaker is handled within the BRS system.

Figure 3. Structure of the complete auditory virtual environment system as applied in the experiment

This psychoacoustic experiment took place in a small soundproof listening room (3 m × 3.5 m × 3 m) at the CNRS (Centre National de la Recherche Scientifique) in Marseille, France. The walls and the ceiling of the room were covered with sound-absorbing material (10 cm foamed plastics). The measured reverberation time of the listening room was about 80 ms. The sound pressure level in the room was lower than 30 dB(A). Thus influences of background noise and reflections of one's own voice stemming from the listening room do not have to be considered.

The experiments were controlled by an operator in an adjacent room who had visual contact with the subjects through a window. Furthermore, an intercom was installed between the two rooms in order to give instructions to the subjects during the experiment. The subjects sat at a table in the center of the room.

The signals were presented by a set of open Sennheiser HD 540 headphones, which can be considered to have free-air equivalent coupling to the ear. The speech signals were recorded by a Sennheiser KE4 microphone 40 mm in front of the lips.

Thirteen subjects aged between 26 and 56 participated in the experiment, three female and ten male. All persons indicated that they had normal hearing ability. Most of them had experience with psychoacoustic experiments, but not with experiments in interactive auditory virtual environments.

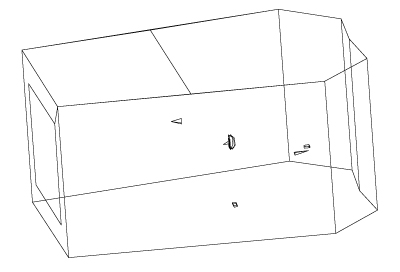

Three different virtual rooms were auralized by the virtual environment during the experiment. The rooms are of the same size and shape, but the wall materials of the virtual room were varied. The room dimensions are 6.4 m × 5.3 m × 2.6 m and the volume amounts to approximately 70 m³. Figure 4 shows the shape of the simulated room.

The auralization was performed for three different rooms: an anechoic room (free-field conditions) in which only direct sound was considered; room No. 1, a studio room (Figure 4) whose surfaces, apart from floor and window, were covered with wood; and room No. 2, which had the same shape as the studio room but whose surfaces, apart from floor and window, were covered with plush padding.

Regarding the self-created sounds, the own voice was recorded, adequately filtered and presented as described before. Additionally, speech stimuli which were not self-created by the subject were presented. The background sounds which were presented to the subjects during the experiment were prerecorded. Sounds which are common in an office, such as the ticking of a clock, someone typing on a keyboard, the sound of a coffee machine or the sound of a fan, were auralized.

Four different sound source positions were simulated in the virtual auditory environment in order to carry out the experiments described in this paper: A position nearly in the centre of the room for the speaker's head (own voice), one position in the back and one to the left for the background signals and one position to the right (external speaker).

The set-up of the experiments allowed switching between the different simulated rooms and the external and self-created sounds in real-time.

The experiment started with an introduction phase, i.e. the scenarios were described and the set-up of the experiment was explained. The subjects were encouraged to perform moderate head movements during the experiment. Short sequences of the different stimuli used in the experiment were presented.

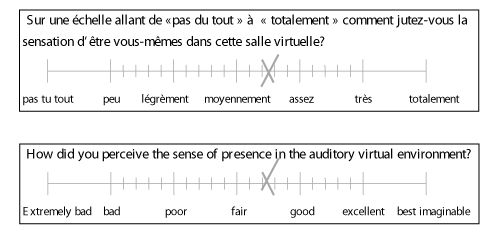

Then the subjects were confronted with the first scenario. They were informed when they should speak and when they should listen.

Before the start of the experiment the expression "presence" (according to [ WS98 ]) as well as the procedure itself were explained to the subjects. At the end of each scenario the subjects were asked to rate presence. The subjects rated presence on a continuous rating scale, which was suggested by Bronwen and McManus [ BM86 ] and Möller [ Moel00 ], as shown in Figure 5. As most of the subjects were native French speakers, the information as well as the categories were given in French. The categories can be translated as follows: extremely bad (pas du tout), bad (peu), poor (légèrement), fair (moyennement), good (assez), excellent (très), best imaginable (totalement). The subjects were asked to give their ratings as intuitively as possible, and not to compare the scores with those of the previous scenarios. The order of the communication scenarios and the order of the presentation conditions were chosen randomly, so that systematic errors caused by the order of the scenarios could be avoided. Each condition was presented twice in a randomly chosen order. The experiment took about 30 minutes for each subject.

Figure 5. Scale for rating the perceived presence in the virtual environment (the original scale and the English translation are shown)

With 13 subjects participating in the test, a total of 26 judgements was collected for each presented condition. The experiment comprised 48 stimuli for each subject.
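The bookkeeping behind these numbers can be sketched as follows. The sketch assumes that each of the 48 stimuli is one presentation of one condition, so that two repetitions would correspond to 24 distinct conditions per subject; this is an inference from the numbers above, not a statement made in the article. The condition labels and the function `presentation_order` are hypothetical.

```python
import random
from collections import Counter

N_SUBJECTS = 13
REPETITIONS = 2            # each condition was presented twice
STIMULI_PER_SUBJECT = 48


def presentation_order(conditions, repetitions, rng):
    """Return every condition `repetitions` times in random order."""
    order = [c for c in conditions for _ in range(repetitions)]
    rng.shuffle(order)
    return order


# Assumption: one stimulus equals one presentation of one condition.
n_conditions = STIMULI_PER_SUBJECT // REPETITIONS
conditions = [f"condition_{i:02d}" for i in range(n_conditions)]  # hypothetical labels

order = presentation_order(conditions, REPETITIONS, random.Random(1))
counts = Counter(order)
judgements_per_condition = N_SUBJECTS * REPETITIONS

print(len(order), set(counts.values()), judgements_per_condition)
```

A separate random generator per subject would give every subject a different order, which is how order effects are usually averaged out across a group.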

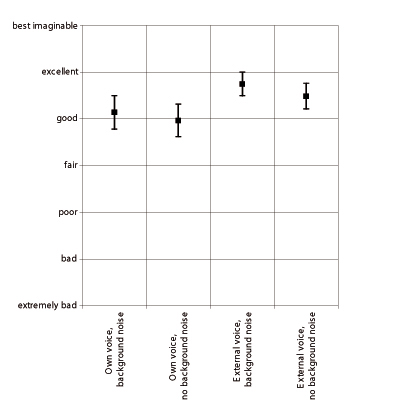

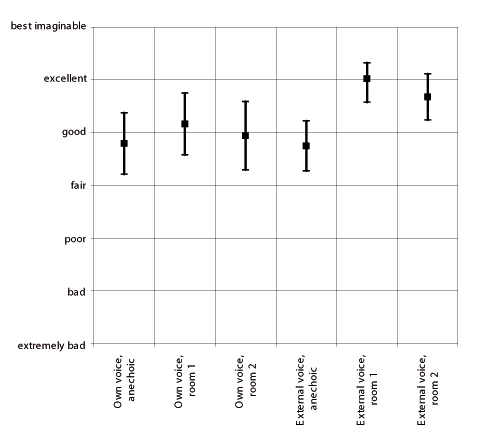

The subjects' scores were analyzed as follows: first, a χ²-test was used to confirm that the scores for each condition were normally distributed. Then the average scores and the standard deviations were calculated for each condition. Figure 6 and Figure 7 depict the average scores and the 95% confidence intervals.
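How an average score and its 95% confidence interval can be obtained from 26 judgements is sketched below. The scores are invented for illustration and the numerical coding of the rating scale is an assumption. The interval is based on Student's t distribution with 25 degrees of freedom; the article does not state which formula was used for the intervals in the figures.

```python
import math
import statistics

# Two-sided 95% quantile of Student's t for 25 degrees of freedom (26 scores).
T_CRIT_95_DF25 = 2.060


def mean_and_ci(scores, t_crit=T_CRIT_95_DF25):
    """Return mean, sample standard deviation and confidence interval."""
    n = len(scores)
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)          # sample standard deviation (n - 1)
    half_width = t_crit * sd / math.sqrt(n)
    return mean, sd, (mean - half_width, mean + half_width)


# Hypothetical ratings of one condition: 13 subjects x 2 repetitions.
scores = [3.0, 4.0] * 13
mean, sd, (ci_low, ci_high) = mean_and_ci(scores)
print(f"mean = {mean:.2f}, sd = {sd:.3f}, 95% CI = [{ci_low:.3f}, {ci_high:.3f}]")
```

Two conditions whose intervals do not overlap differ clearly, whereas overlapping intervals call for a formal test such as the t-test used in the article.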

Figure 6. Influence of the background noise and the type of the sound source on the perception of one's own voice. The average scores as well as the 95% confidence intervals are shown.

According to Figure 6 presence is rated higher for an external speaker than for one's own voice. A comparison of the conditions by means of a t-test [ HEK91 ] shows that the differences between one's own voice and the external voice have a significant influence on the sense of presence in the virtual environment. The perceived presence is significantly higher for the external speaker than for the own voice (p > 0.99). However, most of the subjects reported that they perceived their own voice as natural, although some of them did not notice that the feedback was significantly changed by the presentation of the own voice.

Furthermore, a significant increase in the perceived presence could be observed for the external voice when background stimuli were presented (p > 0.9). For the own voice the same trend could be noticed. However, statistical significance could not be proven here. In general, subjects reported that the presentation of the virtual environment and especially of the background noise was very convincing. Some of them were not even sure whether the stimuli originated from real sound sources in the environment or were produced within the virtual environment.
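A comparison of two conditions with a t-test can be sketched as follows. The article does not state which variant of the test was applied; Welch's unequal-variance form for independent samples is used here purely as an assumption, and the scores are invented. Note that the values given in the text as p > 0.99 and p > 0.9 appear to denote confidence levels rather than error probabilities.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    var_a = statistics.variance(sample_a)
    var_b = statistics.variance(sample_b)
    se_squared = var_a / n_a + var_b / n_b
    t = (statistics.mean(sample_a) - statistics.mean(sample_b)) / math.sqrt(se_squared)
    df = se_squared ** 2 / (
        (var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1)
    )
    return t, df


# Hypothetical ratings, 26 judgements per condition.
external_speaker = [4.0, 5.0] * 13
own_voice = [3.0, 4.0] * 13

t, df = welch_t(external_speaker, own_voice)
print(f"t = {t:.2f}, df = {df:.0f}")
```

The resulting t value is compared with the critical value of Student's t distribution for the computed degrees of freedom and the chosen confidence level; the larger the t value, the stronger the evidence for a difference between the conditions.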

Figure 7. Influence of the type of the room and the type of the sound source (internal / external) on the perception of one's own voice. Both stimuli with and without background noise are considered here. The average scores as well as the 95% confidence intervals are shown.

As shown in Figure 7, the rated presence differs depending on the presented room. Significant differences exist especially between the free-field conditions and the acoustically modeled rooms: compared to the free-field conditions the subjects rated the presence significantly higher for the other two rooms. For the external speaker the differences are statistically significant (p > 0.99). For the own voice the same trend exists, even though it could not be statistically proven by means of a t-test. The differences between the free-field conditions and the two rooms are much larger for the external speaker than for one's own voice. This can be explained by the fact that the reflection levels relative to the direct sound are much higher for an external speaker. No statistically significant difference was found between the two rooms which were equipped with different wall materials. However, as shown in Figure 7, the trend is the same for the two conditions of external speaker and own voice: the average presence ratings were slightly lower for room No. 2 than for room No. 1, which might be caused by the fact that the reflections were weaker in room No. 2 than in room No. 1.

The results of the experiments presented in this study coincide with the findings of Gilkey and Weisenberger [ GW95 ] and show that an increase of the perceived presence is gained if background sounds are adequately presented.

When speaking in the virtual auditory environment the presence in the virtual environment was lower than when listening to an external speaker. In principle this may be caused by two reasons: either the presentation of the own voice by the POV Module might not have created a plausible impression, or the speech of an external speaker has stronger implications for the perception of presence. Evaluation experiments of the POV Module which were performed before the experiment started [ Poer01 ] have shown that a natural impression of the perception of one's own voice is created by the POV Module. Hence it can be concluded that the perception of an external speaker creates a higher degree of presence than the plausible perception of the own voice. This is reasonable because an external speaker gives the listener the impression that someone else is present in the room, which is not the case when listening to one's own speech.

Furthermore, the simulation of reflections in an auditory virtual environment increases the perceived presence. However, reflections are less important for the own voice because the level difference between direct sound and reflections is usually much higher than for external sound sources. Additionally, the influence of head movements is smaller for the own voice, as only the reflections but not the airborne and the bone-conducted sound are altered.
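The argument about level differences can be made concrete with a rough estimate. The sketch considers spherical spreading only, i.e. the level falls by 6 dB per doubling of the path length; wall absorption, the directivity of the voice and the bone-conducted component are ignored. The mouth-to-ear path of 0.1 m is taken from the article, whereas the 2 m distance to the external speaker and the 5 m reflection path are hypothetical values.

```python
import math


def level_difference_db(direct_path_m, reflected_path_m):
    """Level of the direct sound relative to a single reflection,
    assuming spherical spreading only (no absorption, no directivity)."""
    return 20.0 * math.log10(reflected_path_m / direct_path_m)


REFLECTION_PATH_M = 5.0   # hypothetical length of a first reflection path

# Own voice: the direct path from mouth to ear is about 0.1 m.
own_voice_db = level_difference_db(0.1, REFLECTION_PATH_M)

# External speaker: hypothetical direct distance of 2 m.
external_db = level_difference_db(2.0, REFLECTION_PATH_M)

print(f"own voice:        direct sound {own_voice_db:.1f} dB above the reflection")
print(f"external speaker: direct sound {external_db:.1f} dB above the reflection")
```

Under these assumptions the reflection lies far below the direct sound for the own voice but only a few decibels below it for the external speaker, which is in line with the smaller influence of the room on the own-voice ratings.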

The results presented in this study can be regarded as a starting point for a sound designer to create virtual worlds which produce comfort as well as presence for the listener.

The described experiments were carried out at the CNRS in Marseille, in the framework of the PROCOPE bilateral cooperation between the Ruhr-Universität of Bochum and the LMA at the Centre National de la Recherche Scientifique in Marseille. The authors would like to thank the colleagues at the CNRS for their cooperation and for the opportunity to use their laboratories and research facilities in Marseille for the experiments.

The authors also sincerely thank the anonymous reviewers for their suggestions to improve this article.

[Bal07] Self-produced Sound: Tightly Binding Haptics and Audio, Haptic and Audio Interaction Design, 2nd Int. Workshop, Seoul, South Korea, 2007, pp. 1–8, isbn 3-540-76701-0.

[BM86] Graphic Scaling of Quantitative Terms, Society of Motion Picture and Television Engineers (SMPTE) Journal, (1986), 1166–1171.

[DPSB00] An Interactive Virtual-Environment Generator for Psychoacoustic Research: Collection of Head-Related Impulse Responses and Assessment of Auditory Localization and Localization Blur, ACUSTICA/Acta acustica, (2000), 1046–1053, issn 1610-1928.

[Fon92] The Experience of a Sense of Presence in Intercultural and International Encounters, Presence, (1992), no. 4, 482–490, issn 1054-7460.

[GW95] The sense of presence for the suddenly deafened adult, Presence, (1995), no. 1, 357–363, issn 1054-7460.

[HEK91] Lehr- und Handbuch der angewandten Statistik [text and reference book of applied statistics], R. Oldenbourg Verlag GmbH, 1991, isbn 3-486-22055-1.

[HKP99] Design and Applications of a Data-based Auralization System for Surround Sound, 106th AES Convention Munich, 1999, Preprint 4976.

[KZJH04] Auditory cues in the perception of self-motion, 116th AES Convention Berlin, 2004, Preprint 6078.

[LB92] Principles of binaural room simulation, Applied Acoustics, (1992), no. 3/4, 252–291.

[LG95] Vocal Communication in Virtual Environments, Virtual Reality World, 1995, pp. 279–293.

[MFT99] Binaural room scanning - a new tool for acoustic and psychoacoustic research, J. Acoust. Soc. Am., (1999), no. 2, 1343–1344, issn 0001-4966.

[MFT00] Head-tracker based auralization systems: Additional Consideration of vertical head movements, 108th AES Convention Paris, 2000, Preprint 5135.

[Moel00] Assessment and Prediction of Speech Quality in Telecommunications, Kluwer Academic Publ., Boston MA, 2000, isbn 9780792378945.

[Nor05] Self-induced Footsteps Sounds in Virtual Reality: Latency, Recognition, Quality and Presence, M. Slater (Ed.), Proceedings of Presence, 8th International Workshop on Presence, 2005, pp. 353–355, isbn 978-0-79237-894-5.

[Pel00] Perception-based room-rendering for auditory scenes, 109th AES Convention Los Angeles, 2000, Preprint 5229.

[Pel02] A Virtual Reference Listening Room as an Application of Auditory Virtual Environments - Ein virtueller Referenz-Abhörraum als Anwendung auditiver, virtueller Umgebungen, dissertation.de - Verlag im Internet, Berlin, 2002, isbn 3-89825-403-1.

[Pom93] Psychoakustische Verifikation von Computermodellen zur binauralen Raumsimulation [Psychoacoustic verification of computer models for binaural room simulation], Shaker Verlag, Aachen, 1993.

[Poer00] Influences of Bone Conduction and Air Conduction on the Sound of One's Own Voice, ACUSTICA/Acta acustica, (2000), 1038–1045, issn 1610-1928.

[Poer01] One's Own Voice in Auditory Virtual Environments, ACUSTICA/Acta acustica, (2001), 378–388, issn 1610-1928.

[Poes86] Einfluss von Knochenschall auf die Schalldämmung von Gehörschützern [Influence of bone conduction on the sound attenuation of hearing protectors], Schriftenreihe der Bundesanstalt für Arbeitsschutz, Wirtschaftsverlag NW, Bremerhaven, 1986, isbn 3-88314-484-3.

[RSPCB05] Influence of auditory cues on the visually-induced self-motion illusion (circular vection) in virtual reality, M. Slater (Ed.), Proceedings of Presence, 8th International Workshop on Presence, 2005, pp. 49–57, isbn 978-0-79237-894-5.

[She92a] Defining our Terms, Presence, (1992), no. 2, 272–274, issn 1054-7460.

[She92b] Musings on Telepresence and Virtual Presence, Presence, (1992), no. 1, 120–125, issn 1054-7460.

[VLVK08] Sound Representing Self-Motion in Virtual Environments Enhances Linear Vection, Presence, (2008), no. 1, 43–56, issn 1054-7460.

[WS98] Measuring Presence in Virtual Environments: A Presence Questionnaire, Presence, (1998), no. 3, 225–240, issn 1054-7460.

License

Any party may pass on this Work by electronic means and make it available for download under the terms and conditions of the Digital Peer Publishing License. The text of the license may be accessed and retrieved at http://www.dipp.nrw.de/lizenzen/dppl/dppl/DPPL_v2_en_06-2004.html.

Recommended citation

Christoph Pörschmann, and Renato S. Pellegrini, 3-D Audio in Mobile Communication Devices: Effects of Self-Created and External Sounds on Presence in Auditory Virtual Environments. JVRB - Journal of Virtual Reality and Broadcasting, 7(2010), no. 11. (urn:nbn:de:0009-6-26798)

Please provide the exact URL and date of your last visit when citing this article.